Stats with Python: Unbiased Variance

January 17, 2021 | 6 min read | 2,971 views

Are you wondering what unbiased sample variance is? Or, why it is divided by n-1? If so, this post answers them for you with a simple simulation, proof, and an intuitive explanation.

Consider you have i.i.d. samples: , and you want to estimate the population mean and the population variance from these samples. The sample mean is defined as:

This looks quite natural. But what about the sample variance? This is defined as:

When I first saw this, it looked weird. Where does come from? The professor said “this term makes the estimation unbiased”, which I didn’t quite understand. But now, thanks to Python, it’s much clearer than it was then. So, in this post, I’ll make a concise and clear explanation of unbiased variance.

Visualizing How Unbiased Variance is Great

Consider a “biased” version of variance estimator:

In fact, as well as unbiased variance, this estimator converges to the population variance as the sample size approaches infinity. However, the “biased variance” estimates the variance slightly smaller.

Let’s see how these esitmators are different. Suppose you are drawing samples, one by one up to 100, from a continuous uniform distribution . The population mean is and the population variance is

I simulated estimating the population variance using the above two estimators in the following code.

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style("darkgrid")

n = 100

rands = np.random.rand(n)

biased = []

unbiased = []

for i in range(2, n+1):

biased.append(np.var(rands[:i], ddof=0))

unbiased.append(np.var(rands[:i], ddof=1))

x = np.arange(2, n+1)

plt.plot(x, biased, label="Biased")

plt.plot(x, unbiased, label="Unbiased")

plt.hlines(1/12, x[0], x[-1], label="Ground truth")

plt.xlabel("Sample size")

plt.ylabel("Variance")

plt.legend()

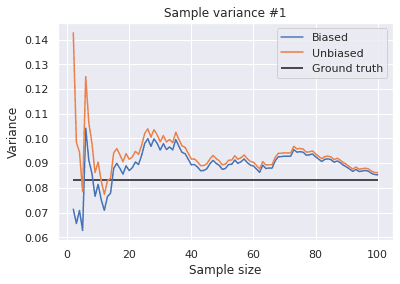

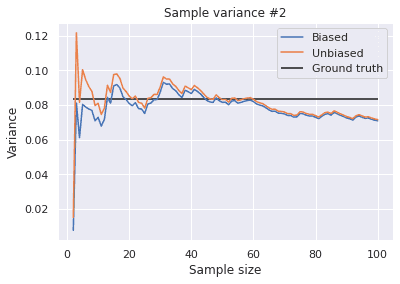

plt.title("Sample variance #1");This code gives different results every time you execute it.

Indeed, both of these estimators seem to converge to the population variance and the biased variance is slightly smaller than the unbiased estimator. However, from these results, it’s hard to see which is more “unbiased” to the ground truth. So, I repeated this experiment 10,000 times and plotted the average performance in the figure below.

n = 100

k = 10000

rands = np.random.rand(n, k)

biased = []

unbiased = []

for i in range(2, n+1):

biased.append(np.var(rands[:i], axis=0, ddof=0).mean())

unbiased.append(np.var(rands[:i], axis=0, ddof=1).mean())

x = np.arange(2, n+1)

plt.plot(x, biased, label="Biased")

plt.plot(x, unbiased, label="Unbiased")

plt.hlines(1/12, x[0], x[-1], label="Ground truth")

plt.xlabel("Sample size")

plt.ylabel("Variance")

plt.legend()

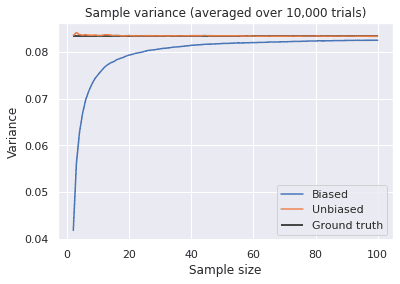

plt.title("Sample variance (averaged over 10,000 trials)");Now it’s clear how the biased variance is biased. Even when there are 100 samples, its estimate is expected to be 1% smaller than the ground truth. In contrast, the unbiased variance is actually “unbiased” to the ground truth.

Proof

Though it is a little complicated, here is a formal explanation of the above experiment. Recall that the variance of the sample mean follows this equation:

Thus,

which means that the biased variance estimates the true variance times smaller. To correct this bias, you need to estimate it by the unbiased variance:

then,

Here, is a quantity called degree of freedom. In the above example, the samples are subject to the equation:

So, given the sample mean , the samples have only degrees of freedom. When I called the function np.var() in the experiment, I specified ddof=0 or ddof=1. This argument is short for delta degree of freedom, meaning how many degrees of freedom are reduced.

Intuition

The bias of the biased variance can be explained in a more intuitive way. By definition, the sample mean is always closer to the samples than the population mean, which leads to the smaller variance estimation if divided by the sample size . For more explanations, I’d recommend this video:

References

[1] 東京大学教養学部統計学教室 編. ”統計学入門“(第9章). 東京大学出版会. 1991.

![[object Object]](/static/2d0f4e01d6e61412b3e92139e5695299/e9fba/profile-pic.png)

Written by Shion Honda. If you like this, please share!