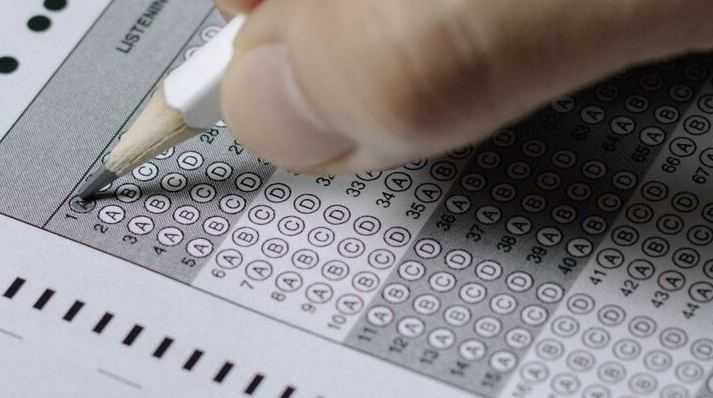

Kaggle Competition Report: Automated Essay Scoring 2.0

July 07, 2024 | 5 min read

This competition was all about distribution shift. Let's learn how the winners conquered the challenge.

Under the sea, in the hippocampus's garden...



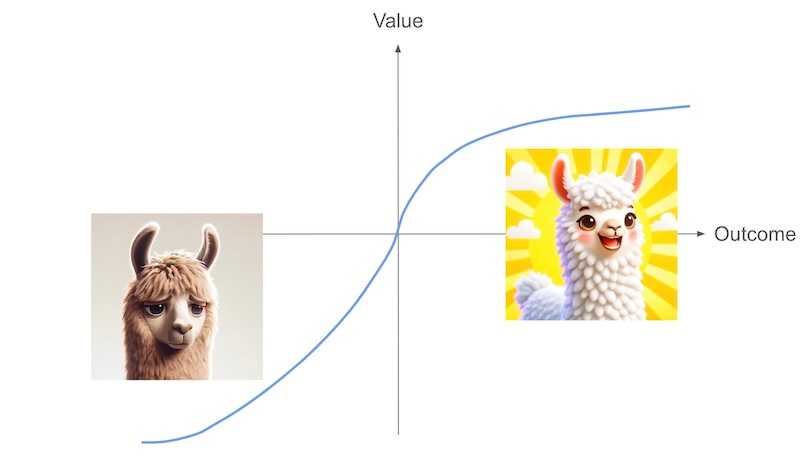

It's hard to collect paired preferences. Can we align LLMs without them? Yes, with KTO!

DPO reduces the effort required to align LLMs. Here is how I created the Reviewer #2 Bot from TinyLlama using DPO.

Can LLMs answer scientific questions? See how Kaggle winners used LLMs and RAG!

What I cannot create, I do not understand. Let's train your own LLM!

How do you invert the text-to-image generation by Stable Diffusion? Let's take a look at the solutions by the winning teams.

A quick guide for RLHF using trlX, OPT-1.5B, and LoRA.

Transformer has undergone various application studies, model enhancements, etc. This post aims to provide an overview of these studies.

This post explains how MobileBERT succeeded in reducing both model size and inference time and introduce its implementation in TensorFlow.js that works on web browsers.