Year in Review: Deep Learning Papers in 2024

December 29, 2024 | 13 min read

Reflecting on 2024's deep learning breakthroughs! Discover my top 10 favorite research papers that shaped the field this year.

Under the sea, in the hippocampus's garden...

![Profile picture](/static/2d0f4e01d6e61412b3e92139e5695299/e9fba/profile-pic.png)

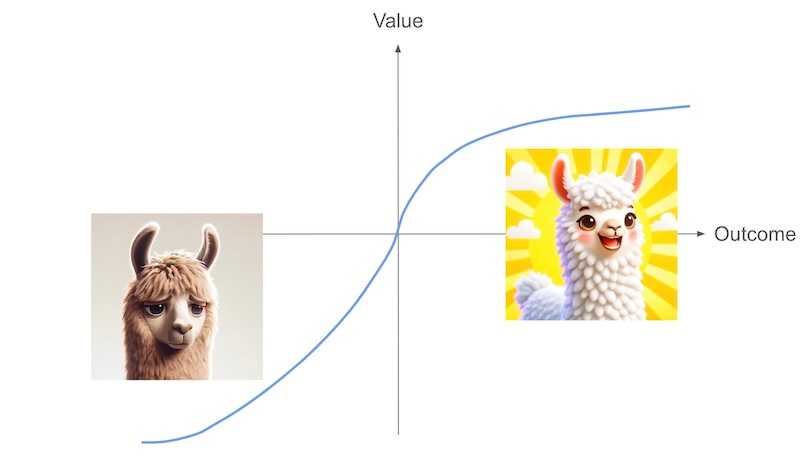

RLHF is not the only method for AI alignment. This article introduces modern algorithms like DPO and KTO that offer simpler and more stable alternatives.

It's hard to collect paired preferences. Can we align LLMs without them? Yes, with KTO!

DPO reduces the effort required to align LLMs. Here is how I created the Reviewer #2 Bot from TinyLlama using DPO.

Let's look back at the significant progress made in deep learning in 2023! Here are my 10 favorite papers.

What I cannot create, I do not understand. Let's train your own LLM!

PyTorch 2.0 introduced a new feature for JIT compilation. How can it accelerate model training and inference?

A quick guide for RLHF using trlX, OPT-1.5B, and LoRA.

I summarized the "Deep Learning Tuning Playbook," a guide for choosing hyperparameters.

Uncover the top deep learning advancements of 2022. A year-in-review of key research papers and applications.

A collection of images I asked DALL·E 2 to generate.

Let's look back at the updates in deep learning in 2021! This post covers four application projects worth checking out.

Let's look back at the updates in deep learning in 2021! This post covers eight research papers worth checking out.

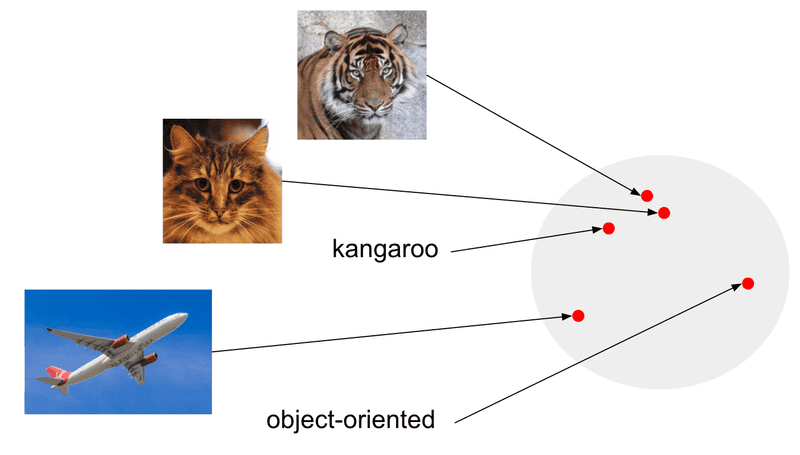

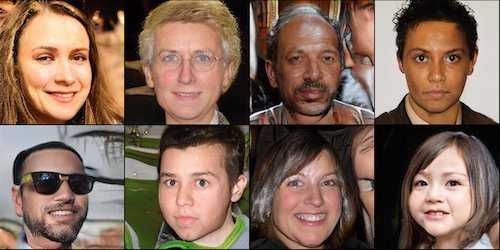

StyleGAN's ability to generate super-realistic images has inspired many applications. To give an organized view of them, this post covers important papers with a focus on image manipulation.

The NeurIPS 2020 virtual conference was full of exciting presentations! Here I list some notable ones with brief introductions.

Let's look back on the machine learning papers published in 2020! This post covers 10 representative papers that I found interesting and worth reading.

The Transformer has been the subject of many application studies and model enhancements. This post aims to provide an overview of these studies.

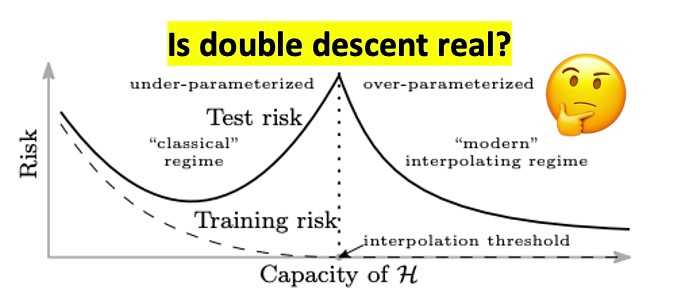

Double descent is one of the mysteries of modern machine learning. I reproduced the main results of the recent paper by Nakkiran et al. and posed some questions that occurred to me.

This post explains how MobileBERT succeeded in reducing both model size and inference time, and introduces its TensorFlow.js implementation, which runs in web browsers.

"Representation" is a way AIs understand the world. This post is a short introduction to representation learning in the "deep learning era."

Want to generate realistic images with a single GPU? This post demonstrates how to downsize StyleGAN2 with only slight performance degradation.