Awesome StyleGAN Applications

November 12, 2021 | 10 min read | 3,061 views

Since its debut in 2018, StyleGAN attracted lots of attention from AI researchers, artists and even lawyers for its ability to generate super realistic high-resolution images of human faces. At the time of this writing, the original paper [1] has 2,548 citations and its successor StyleGAN2 [2] has 1,065. This means that the number of application works is growing at a dizzying rate. To keep me from getting overwhelmed, I’m writing this post to overlook this research field in an organized way. Of course, it’s impossible to read thousands of papers, so hereafter I’ll focus on the papers that relate to image manipulation including GAN inversion and 3D control.

StyleGANs: A Quick Recap

First of all, let’s briefly recall what StyleGAN was and what the key updates of its subsequent versions were.

StyleGAN

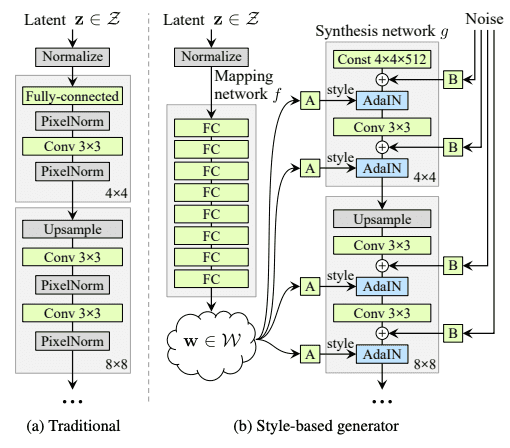

The architecture of the original StyleGAN generator was novel in three ways:

- Generates images in two-stages; first map the latent code to an intermediate latent space with the mapping network and then feed them to each layer of the synthesis network, rather than directly inputs the latent code to the first layer only.

- Applies AdaIN [3] layers iteratively to capture the intermediate latent code as “styles”.

- Injects explicit noise inputs to deal with stochastic variations such as hair and freckles.

These are best described in the figure below.

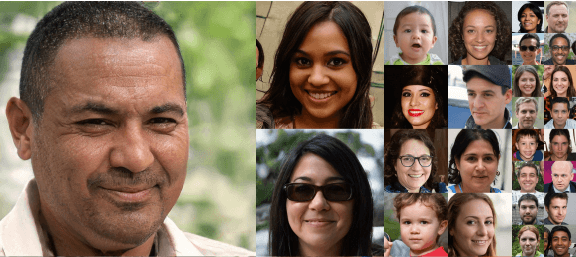

With these features combined, StyleGAN can generate images that are almost impossible to discriminate for humans.

This quality was so amazing that many people rushed to train StyleGAN with their own datasets to generate cats, ukiyoe, Pokémons, and more (see Awesome Pretrained StyleGAN for details).

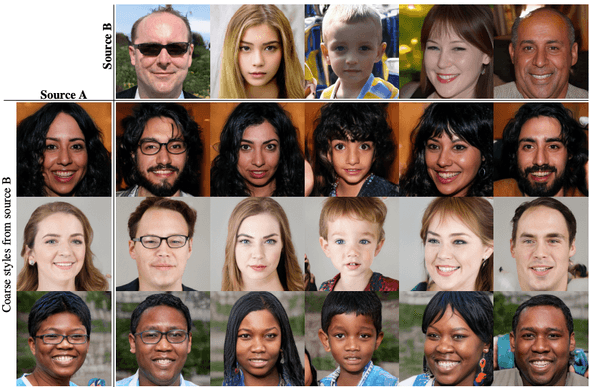

StyleGAN has another interesting feature called ”style mix”. With two latent codes and (and corresponding and ), one can switch the inputs from to in the middle of the synthesis network and get a mixed image that has B’s coarse styles and A’s fine styles.

StyleGAN2

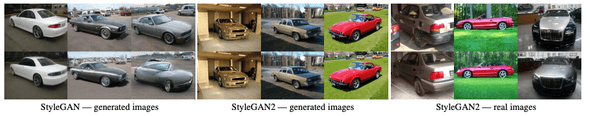

The human face images generated by StyleGAN look convincing enough, but if you have a careful look, you may notice some unnatural artifacts. In StyleGAN2 [2], some architectural optimizations were made to StyleGAN to facilitate even more realistic generation, though I don’t go into the technical details here.

One of the most important updates of StyleGAN2 is that by regularizing the perceptual path length, it became easier to invert. Now it is possible to encode a given image (either generated or real) into the intermediate style space .

This paved the way for GAN inversion — projecting an image to the GAN’s latent space where features are semantically disentangled, as is done by VAE. As we’ll see in the next section, StyleGAN2 is currently the most widely used version in terms of the number of application works.

StyleGAN3 (Alias-Free GAN)

In June 2021, the Tero Karras team published Alias-Free GAN (later named StyleGAN3) to address the undesirable aliasing effect that leads to some details glued to the absolute coordinates of the image [4].

A video is worth a thousand words. The official video clearly demonstrates the “texture sticking” issue and how StyleGAN3 solves it perfectly. Now it can be trained on unaligned images like FFHQ-U. For StyleGAN3 applications? There are only a few yet.

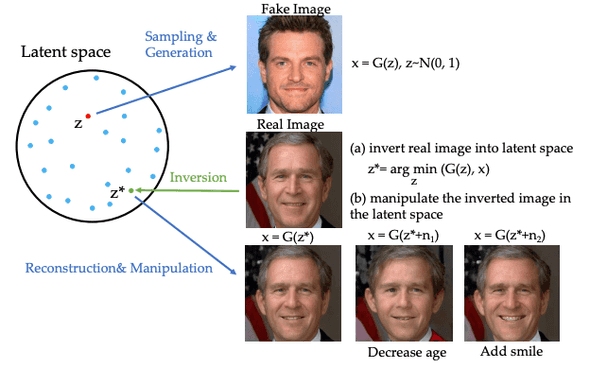

StyleGAN Inversion

GAN inversion is a technique to invert a given image back to the latent space, where semantic editing is easily done. For example, when you get a latent code of your portrait , you can generate a younger version by adding the “decrease age” vector and feeding it to the generator.

There are three approaches to GAN inversion:

- Optimization-based methods use an iterative algorithm, such as gradient descent, to search for the latent code that minimizes the reconstruction loss. They generally yield better reconstruction results but take a much longer time due to the iterative process.

- Learning-based methods train an encoder that inverts images to the latent space. They are generally faster and less accurate than their optimization-based counterparts.

- Hybrid methods of these two. Typically, they first invert a given image to the latent code with a learning-based encoder and then optimize it further. This is expected to reduce the time for optimization and the quality of inversion.

Let’s have a look at some examples.

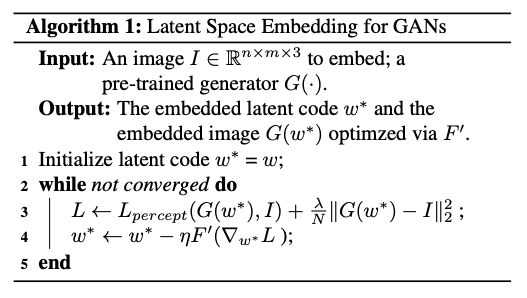

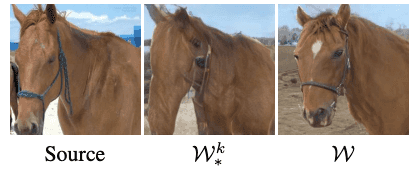

Image2StyleGAN

Image2StyleGAN [6] is a simple but effective implementation of an optimization-based approach. It minimizes the sum of the perceptual loss and L2 loss to get the embedded latent code.

The authors noticed that the 512-dimensional intermediate latent space is not informative enough to restore the input image. So, they extended the latent space to , a concatenation of 18 different vectors, one for each input to the StyleGAN’s AdaIN layers. The resulting space is well disentangled and allows multiple face image editing applications, including style transfer and expression transfer. This feature was further explored in Image2StyleGAN++ [7].

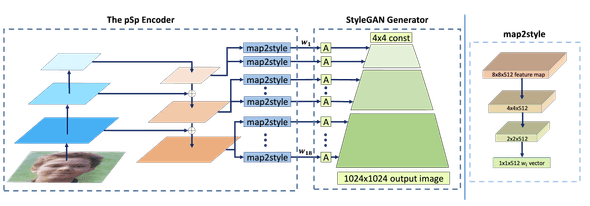

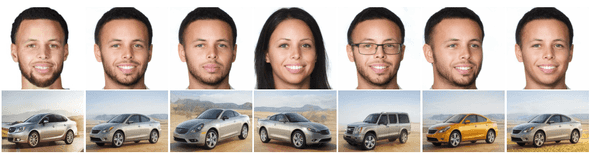

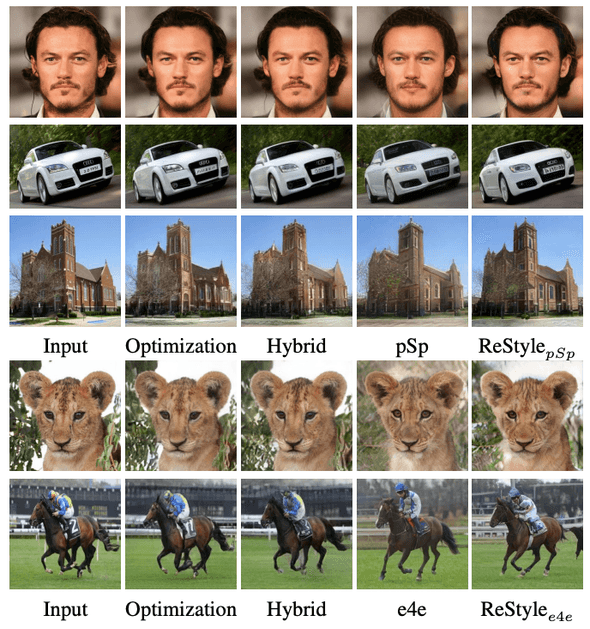

pSp

The learning-based approach pSp (pixel2style2pixel) is a general image-to-image translation framework [8]. The encoder is trained to minimize the reconstruction loss (the sum of L2, LPIPS, identity, and latent code regularization loss) and directly translates the input image to the extended latent code ().

The pSp encoder can be trained on images not represented in the StyleGAN domain. In that way, pSp can generate images conditioned on inputs like sketches and segmentation masks.

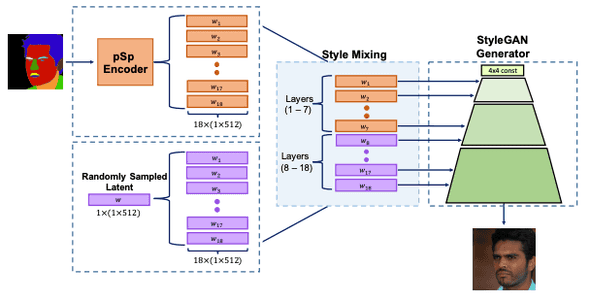

e4e

e4e (Encoder for Editing) is a learning-based encoder specifically designed for semantic editing after inversion [9].

First, the authors summarized the variations of the intermediate latent space and the extended . They use notation for the layer extension and explicitly denote when it is not the equal distribution to . Now, four variations listed in the table below should be considered. The conventional space is now or depending on its distribution.

| Individual style codes are limited to | Same style code in all layers | |

|---|---|---|

| ✅ | ✅ | |

| ✅ | ||

| ✅ | ||

Based on the above definition, the authors shed light on distortion-editability tradeoff and distortion-perception tradeoff. That is, inverting to space leads to less distortion (less reconstruction loss) than inverting to , but it is also less realistic and harder to edit results. The figure below depicts the distortion-perception tradeoff.

e4e employs the pSp’s architecture but has control of these tradeoffs by putting closer to and . The editability-aware encoder achieves good reconstruction and semantic editing at the same time.

ReStyle

To improve the reconstruction accuracy of pSp and e4e, ReStyle is tasked with predicting a residual of the current estimate to the target [10]. It works with a negligible increase in inference time.

Semantic Editing

Once the latent code is obtained, we can semantically edit the original image by moving it to a certain direction in the latent space. There are mainly two types of input:

- Two images (source and reference), possibly with additional instructions including masks or attribute indices.

- An image and an attribute index.

To put it simply, the former is more like a copy-pasting, while the latter attempts to control the output proactively. Plus, the latter assumes that the edits are made multiple times interactively.

In this area, it is usually assumed that StyleGAN inversion algorithms are given.

Image2StyleGAN++

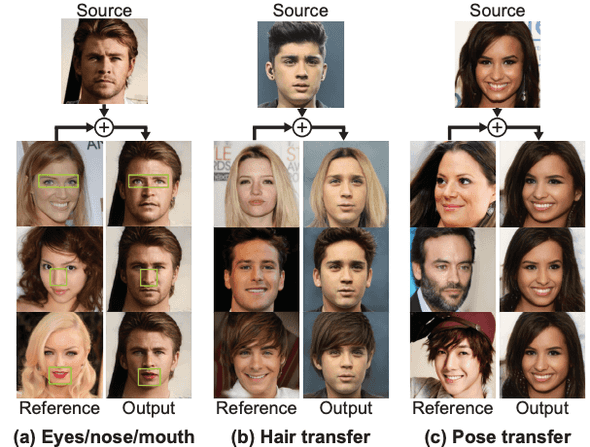

Image2StyleGAN++ extends Image2StyleGAN to take spatial masks as input to allow local editing [7]. For example, it can perform image crossover:

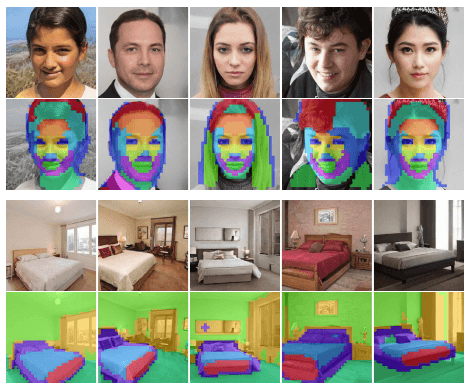

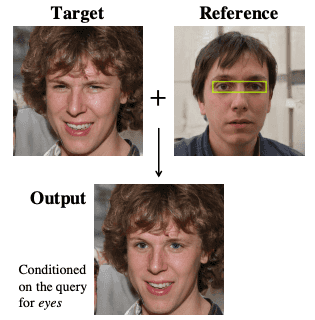

Editing in Style

Thanks to the well-disentangled latent space, the k-means clustering of the hidden layer activations of the StyleGAN generator provides an interesting insight: the clusters are semantically meaningful even they were decomposed in an unsupervised way [11].

Then, the contribution matrix (each element is the contribution of channel to cluster ) can be used to determine the style mixing coefficients. This enables to make a local edit (e.g., swapping eyes).

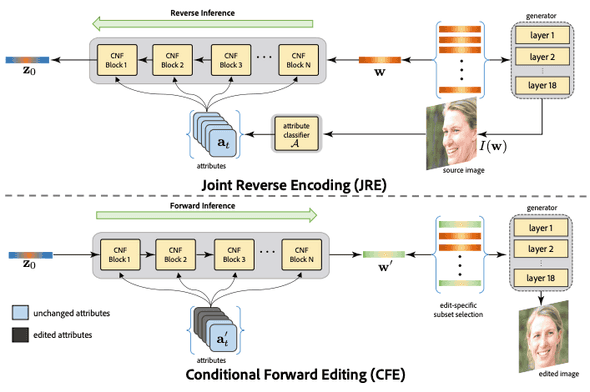

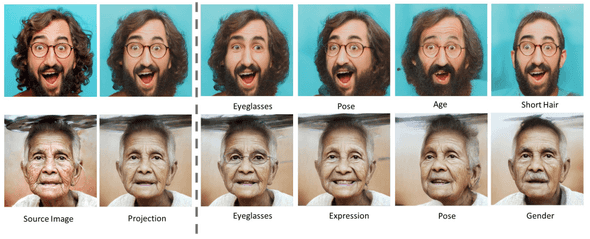

StyleFlow

To facilitate more fine-grained and editable encoding, StyleFlow replaces the fully connected layers in the mapping network with attribute-aware continuous normalizing flow (CNF) blocks [12].

The results are impressive. The two source images are real images (i.e., not generated by StyleGAN), and StyleFlow successfully translates these extreme samples.

StyleSpace Analysis

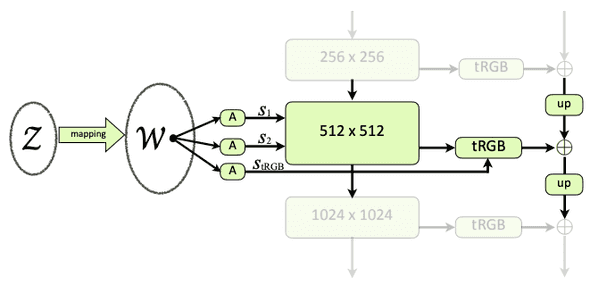

Wu et al. quantitatively analyzed how disentangled StyleGAN’s latent spaces are. Although the extended intermediate latent space is the standard choice, they discovered that the style space is actually more disentangled than [13]. As depicted in the figure below, the style space is spanned by a concatenation of affined intermediate latent codes.

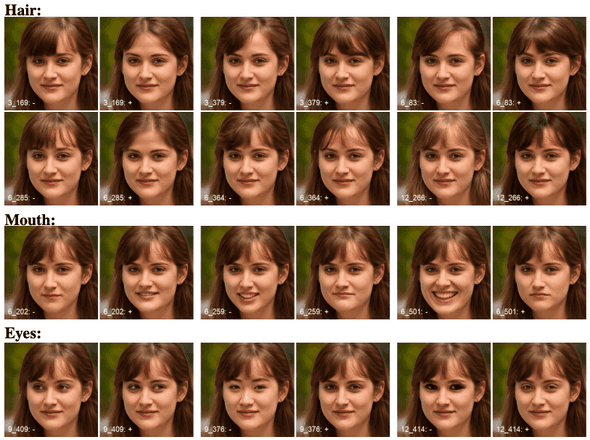

The images below demonstrate how well the style space is disentangled. Each pair is the result of decreasing (-) and increasing (+) a single element of the style code. The numbers in the bottom left corner indicate the layer index and the channel index. Note that the base image is a fake one generated by StyleGAN2.

Retrieve in Style

Retrieve in Style extended Editing in Style to edit more global features such as pose and hairstyle [14].

As the title suggests, the authors also provide an algorithm for attribute-guided image retrieval.

3D Control

Among several types of semantic edits, reposing is the most difficult task as it often requires drastic and global changes. Thus, some works explicitly estimate 3D information and use it to perform visually natural reposing.

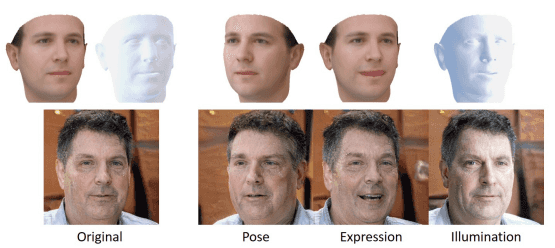

StyleRig

StyleRig can control human face images generated by StyleGAN like a face rig, by translating the semantic editing on 3D meshes by RigNet [16] to the latent code of StyleGAN [15]. Here, StyleGAN is supposed to be pre-trained and RigNet can be trained in a self-supervised manner.

Pose with Style

Pose with Style exploits StyleGAN2 to perform human reposing [17]. The target pose information is used as input, and the original image and coordinate information are injected as style. This algorithm is also applicable to the virtual try-on task.

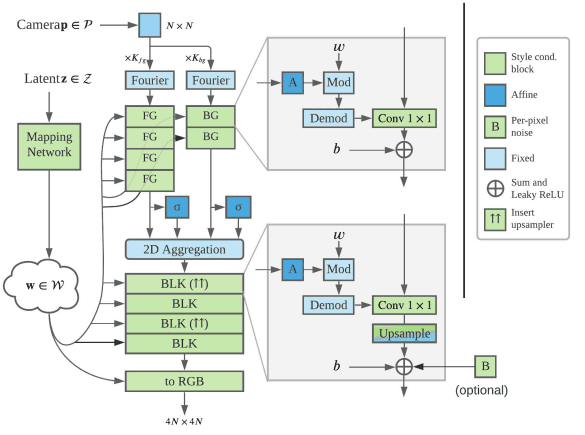

StyleNeRF

StyleNeRF integrates StyleGAN2 and NeRF (neural radiance field) [19] to generate 3D-consistent high-resolution images [18]. The inputs to the synthesis network are the intermediate latent code and the low-resolution feature map generated by NeRF from the camera pose .

The figure below shows how StyleNeRF enjoys the benefits from both NeRF’s 3D consistency and StyleGAN2’s perception quality.

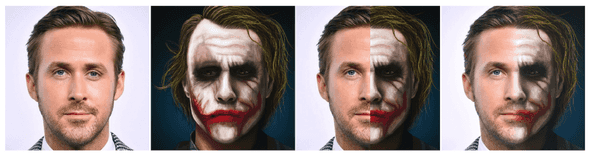

Text-guided Manipulation

Last but not least, text-guided manipulation is an interesting application of StyleGAN.

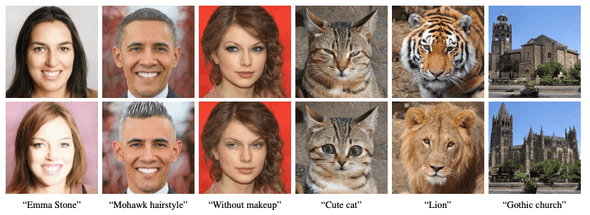

StyleCLIP

Based upon CLIP (contrastive language-image pretraining) [21], the state-of-the-art text-to-image generator, StyleCLIP finds the optimal direction to move forward in the latent space so that the input image will be edited according to the instruction [20].

A wide variety of text instructions can be accepted. Since CLIP has certain knowledge about proper nouns, it is possible to edit a face to look like “Emma Stone”.

Concluding Remarks

StyleGAN had such a great impact that its applications are growing fast. I hope this article will give you some sort of perspective on them.

Thanks for reading. As always, feedback is welcomed.

References

[1] Tero Karras, Samuli Laine, Timo Aila. ”A Style-Based Generator Architecture for Generative Adversarial Networks“. CVPR. 2019.

[2] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, Timo Aila. ”Analyzing and Improving the Image Quality of StyleGAN

“. CVPR. 2020.

[3] Xun Huang, Serge Belongie. ”Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization“. ICCV. 2017.

[4] Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, Timo Aila. ”Alias-Free Generative Adversarial Networks“. NeurIPS. 2021.

[5] Weihao Xia, Yulun Zhang, Yujiu Yang, Jing-Hao Xue, Bolei Zhou, Ming-Hsuan Yang. ”GAN Inversion: A Survey”. 2021.

[6] Rameen Abdal, Yipeng Qin, Peter Wonka. ”Image2StyleGAN: How to Embed Images Into the StyleGAN Latent Space?“. ICCV. 2019.

[7] Rameen Abdal, Yipeng Qin, Peter Wonka. ”Image2StyleGAN++: How to Edit the Embedded Images?“. CVPR. 2020.

[8] Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, Daniel Cohen-Or. ”Encoding in Style: A StyleGAN Encoder for Image-to-Image Translation“. CVPR. 2021.

[9] Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, Daniel Cohen-Or. ”Designing an Encoder for StyleGAN Image Manipulation“. SIGGRAPH. 2021.

[10] Yuval Alaluf, Or Patashnik, Daniel Cohen-Or. ”ReStyle: A Residual-Based StyleGAN Encoder via Iterative Refinement“. ICCV. 2021.

[11] Edo Collins, Raja Bala, Bob Price, Sabine Süsstrunk. ”Editing in Style: Uncovering the Local Semantics of GANs“. CVPR. 2020.

[12] Rameen Abdal, Peihao Zhu, Niloy Mitra, Peter Wonka. ”StyleFlow: Attribute-conditioned Exploration of StyleGAN-Generated Images using Conditional Continuous Normalizing Flows“. SIGGRAPH. 2021.

[13] Zongze Wu, Dani Lischinski, Eli Shechtman. ”StyleSpace Analysis: Disentangled Controls for StyleGAN Image Generation“. CVPR. 2021.

[14] Min Jin Chong, Wen-Sheng Chu, Abhishek Kumar, David Forsyth. ”Retrieve in Style: Unsupervised Facial Feature Transfer and Retrieval”. 2021.

[15] Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, Christian Theobalt. ”StyleRig: Rigging StyleGAN for 3D Control over Portrait Images“. CVPR. 2020.

[16] Zhan Xu , Yang Zhou , Evangelos Kalogerakis , Chris Landreth , Karan Singh. ”RigNet: Neural Rigging for Articulated Characters“. SIGGRAPH. 2020.

[17] Badour AlBahar, Jingwan Lu, Jimei Yang, Zhixin Shu, Eli Shechtman, Jia-Bin Huang. ”Pose with Style: Detail-Preserving Pose-Guided Image Synthesis with Conditional StyleGAN“. SIGGRAPH Asia. 2021.

[18] Jiatao Gu, Lingjie Liu, Peng Wang, Christian Theobalt. ”StyleNeRF: A Style-based 3D-Aware Generator for High-resolution Image Synthesis”. 2021.

[19] Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng. ”NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis“. ECCV. 2020.

[20] Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, Dani Lischinski. ”StyleCLIP: Text-Driven Manipulation of StyleGAN Imagery“. ICCV. 2021.

[21] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever. ”Learning Transferable Visual Models From Natural Language Supervision“. ICML. 2021.

![[object Object]](/static/2d0f4e01d6e61412b3e92139e5695299/e9fba/profile-pic.png)

Written by Shion Honda. If you like this, please share!