Book Review: Database Internals

April 27, 2025 | 10 min read

Under the sea, in the hippocampus's garden...

A deep dive into how databases work.

Learn how to extend Claude's capabilities by building your own Model Context Protocol server.

A detailed guide on how to build applications with foundation models.

In ordinal regression, the classes have a natural order, and there are dedicated loss functions that exploit this information.

Reflecting on 2024's deep learning breakthroughs! Discover my top 10 favorite research papers that shaped the field this year.

RLHF is not the only method for AI alignment. This article introduces modern algorithms like DPO and KTO that offer simpler and more stable alternatives.

This competition was all about distribution shift. Let's learn how the winners conquered the challenge.

It's hard to collect paired preferences. Can we align LLMs without them? Yes, with KTO!

DPO reduces the effort required to align LLMs. Here is how I created the Reviewer #2 Bot from TinyLlama using DPO.

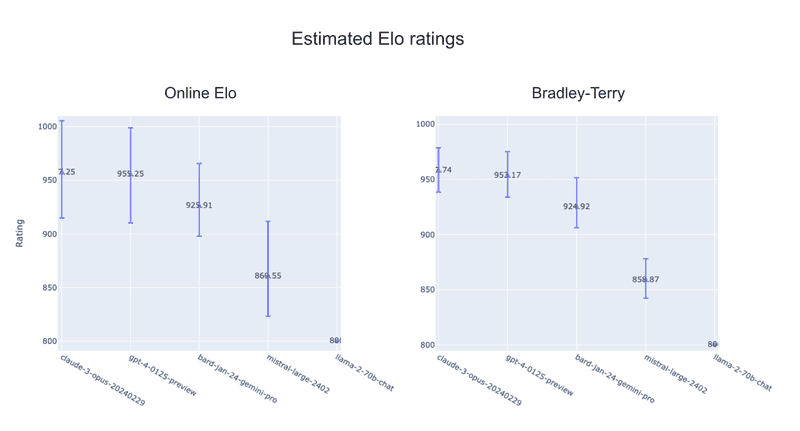

Chatbot Arena updated its LLM ranking method from Elo to Bradley-Terry. What changed? Let's dig into the differences.

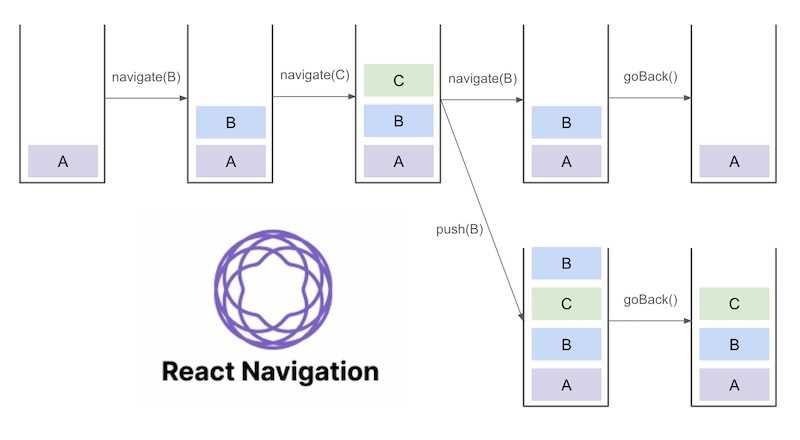

Does your React Native app go back to an unexpected screen? Here's how to deal with it.

Let's look back at the significant progress made in deep learning in 2023! Here are my 10 favorite papers.

Can LLMs answer scientific questions? See how Kaggle winners used LLMs and RAG!

Discover the power of Server-Sent Events in Flask for a better developer experience with chatbots.

What I cannot create, I do not understand. Let's train your own LLM!

How do you invert the text-to-image generation by Stable Diffusion? Let's take a look at the solutions by the winning teams.

PyTorch 2.0 introduced a new feature for JIT-compiling. How can it accelerate model training and inference?

Learn how to easily obtain a list of email addresses for team members.

A quick guide for RLHF using trlX, OPT-1.5B, and LoRA.

Uncover the top deep learning advancements of 2022. A year-in-review of key research papers and applications.

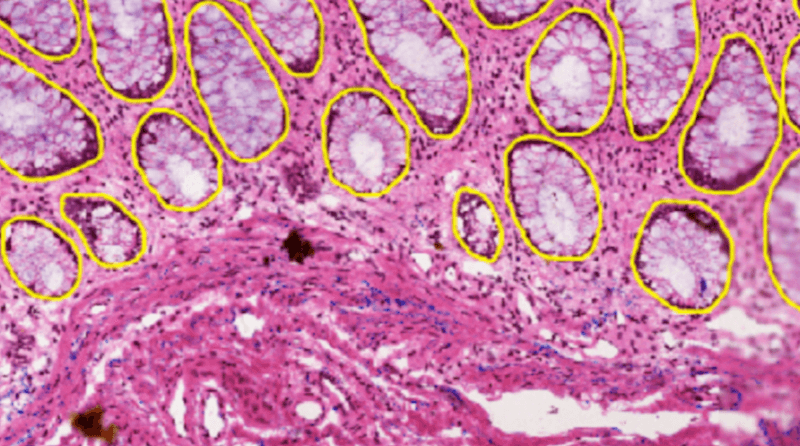

HuBMAP + HPA was a competition on image segmentation with a twist in how to split the dataset. How did winners approach this problem?

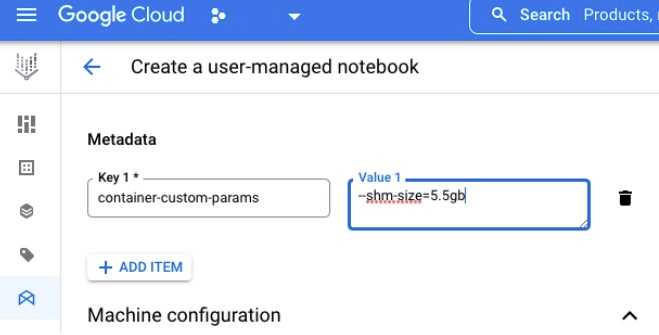

If you want more shm in Workbench, specify shm size in the "Metadata" pane when creating a notebook.

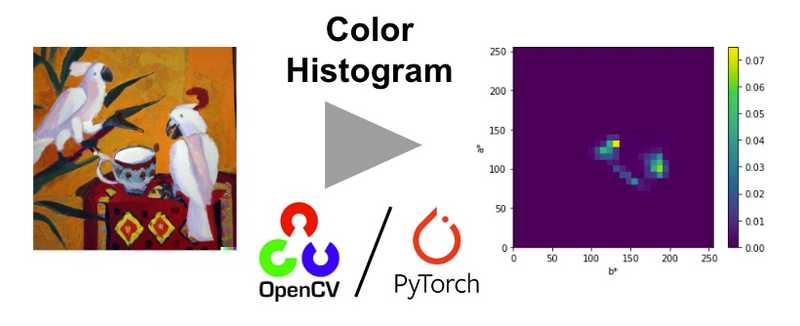

Two ways to calculate a color histogram: OpenCV-based and PyTorch-based.

A collection of images I asked DALL・E 2 to generate.

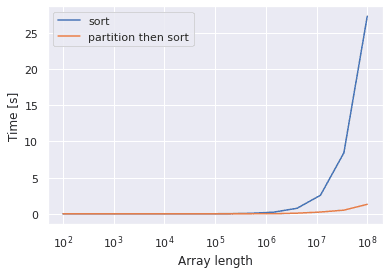

An optimized NumPy implementation of a top-k function.

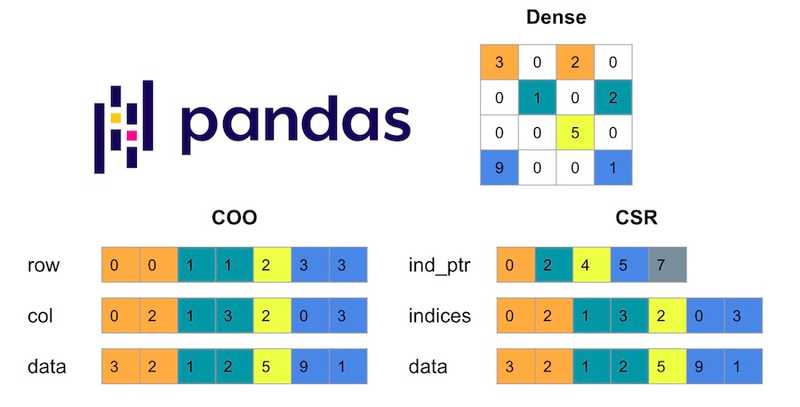

This post shows how to convert a DataFrame of user-item interactions to a compressed sparse row (CSR) matrix, the most common format for sparse matrices.

Let's look back at the updates in deep learning in 2021! This post covers four application projects worth checking out.

Let's look back at the updates in deep learning in 2021! This post covers eight research papers worth checking out.

StyleGAN's ability to generate super-realistic images has inspired many applications. To give an organized view of them, this post covers important papers with a focus on image manipulation.

The Kaggle SETI competition has ended. Did kagglers succeed in finding aliens? Let's see.

It's so easy for me to forget how to set up Jupyter in a newly created Poetry / Pipenv environment. So, here it is.

This post shows you how to set up a cheap and comfortable computing environment for Kaggle using Colab Pro and Google Drive. Happy Kaggling!

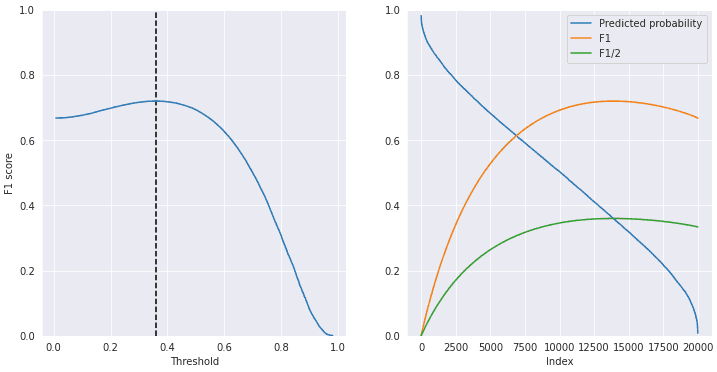

This post attempts to take a deeper look at F1 score. Do you know that, for calibrated classifiers, the optimal threshold is half the max F1? How come? Here it's explained.

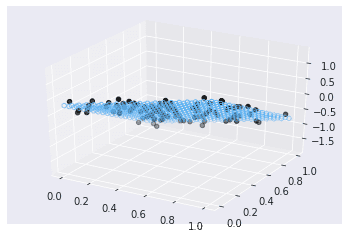

This post steps forward to multiple linear regression. The method of least squares is revisited, this time with linear algebra.

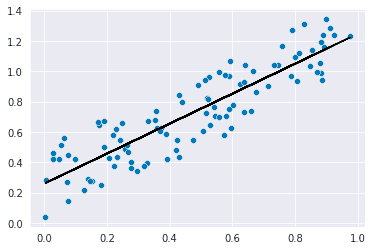

This post summarizes the basics of simple linear regression: the method of least squares and the coefficient of determination.

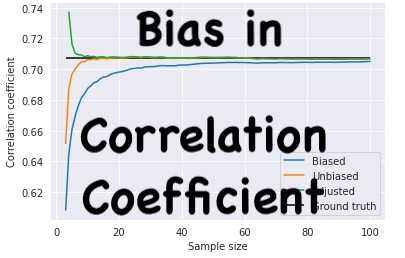

Is the sample correlation coefficient an unbiased estimator? No! This post visualizes how large its bias is and shows how to fix it.

A Go implementation of the dispatcher-worker pattern with errgroup. It immediately cancels the other jobs when an error occurs in any goroutine.

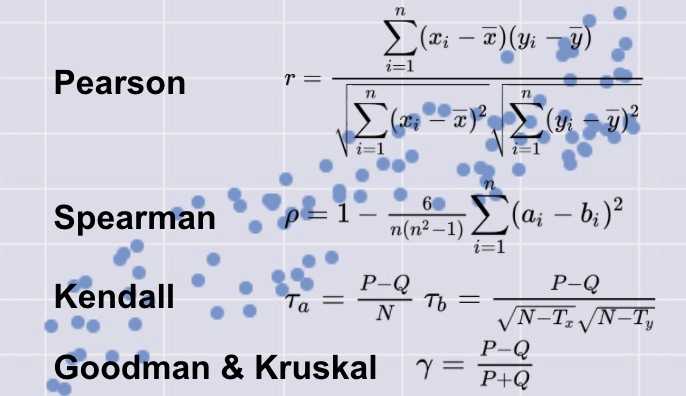

The correlation coefficient is a familiar statistic, but there are several variations whose differences should be noted. This post recaps the definitions of these common measures.

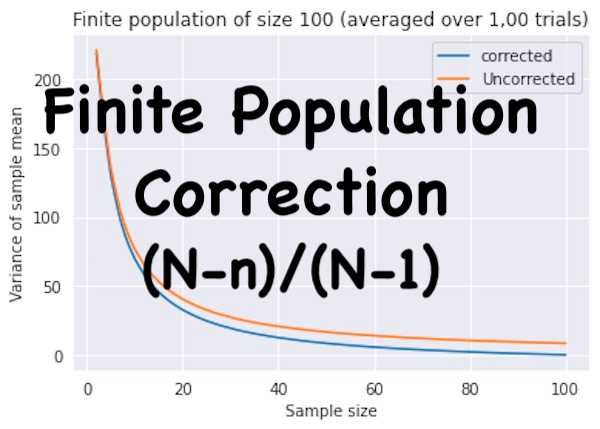

When you sample from a finite population without replacement, beware the finite population correction. The samples are not independent of each other.

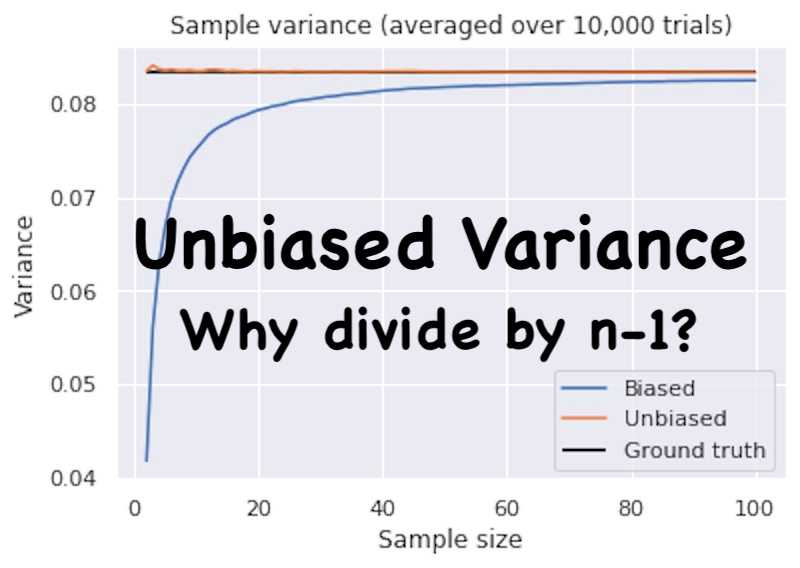

What is unbiased sample variance? Why divide by n-1? With a little programming with Python, it's easier to understand.

Let's re-implement face swapping in 10 minutes! This post shows a naive solution using a pre-trained CNN and OpenCV.

The NeurIPS 2020 virtual conference was full of exciting presentations! Here I list some notable ones with brief introductions.

Let's look back on the machine learning papers published in 2020! This post covers 10 representative papers that I found interesting and worth reading.

Lightweight GAN has opened the way for generating fine images with ~100 training samples and affordable computing resources. This post presents "This Sushi Does Not Exist" and how I built it with GAE.

If you want to use a custom loss function with a modern GBDT model, you'll need the first- and second-order derivatives. This post shows how to implement them, using LightGBM as an example.

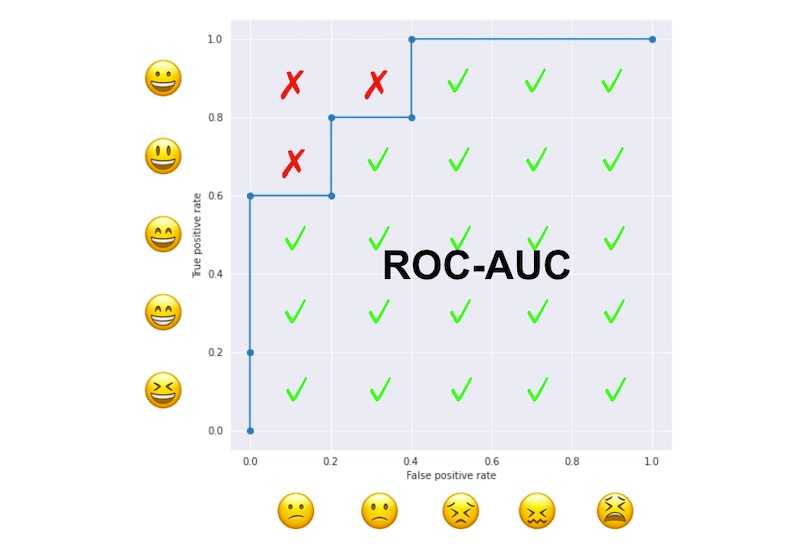

Why is ROC-AUC equal to the probability that a positive sample is ranked higher than a negative one? This post provides an answer with a fun example.

The Transformer has been the subject of many application studies and model enhancements. This post aims to provide an overview of these studies.

This post shows how to sample groups from a dataset, which is helpful when you want to avoid data leakage.

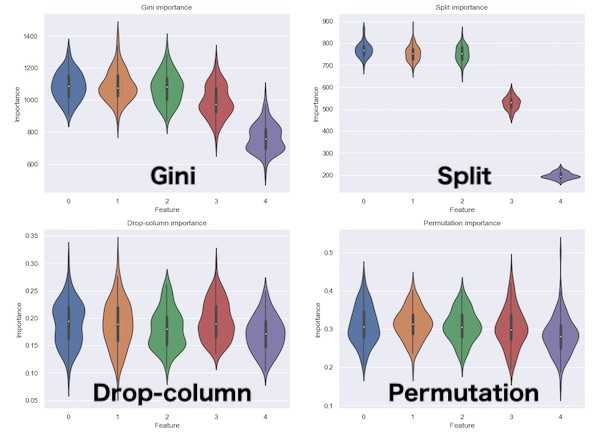

This post compares the behaviors of different feature importance measures in tricky situations.

This post introduces the Pandas `query` method, which allows us to query DataFrames in an SQL-like manner.

This is Go sample code that calls a function periodically for a specified amount of time.

This post introduces PFRL, a new reinforcement learning library, and uses it to learn to play the Slime Volleyball game on Colaboratory.

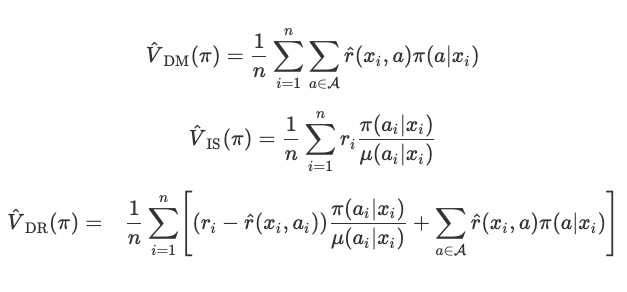

Causal inference is becoming a hot topic in the ML community. This post formulates one of its important concepts, the doubly robust estimator, with simple notation.

This post shows how to count page views and show popular posts in the sidebar of a Gatsby blog. Google Analytics saves you the trouble of preparing databases and APIs.

This post summarizes how to group data by some variable and draw boxplots on it using Pandas and Seaborn.

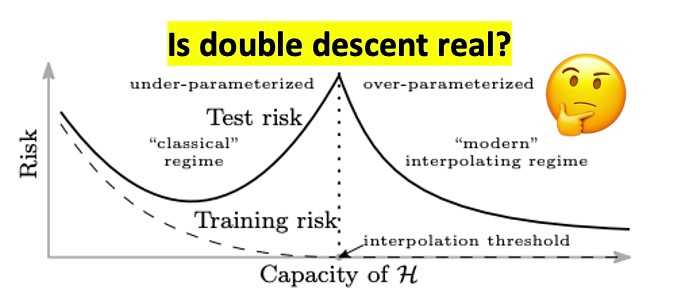

Double descent is one of the mysteries of modern machine learning. I reproduced the main results of the recent paper by Nakkiran et al. and posed some questions that occurred to me.

This post explains how MobileBERT succeeded in reducing both model size and inference time, and introduces its TensorFlow.js implementation that works in web browsers.

Stuck on an error when enabling Gatsby incremental builds on Netlify? This post might help.

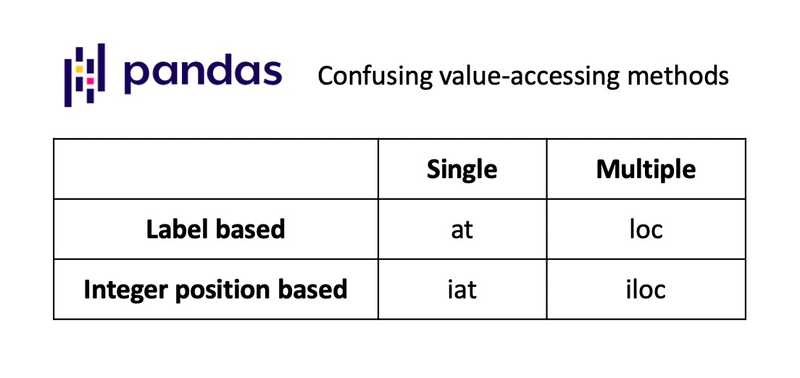

Have you ever confused the Pandas methods `loc`, `at`, and `iloc` with each other? They're no longer confusing once you have this table in mind.

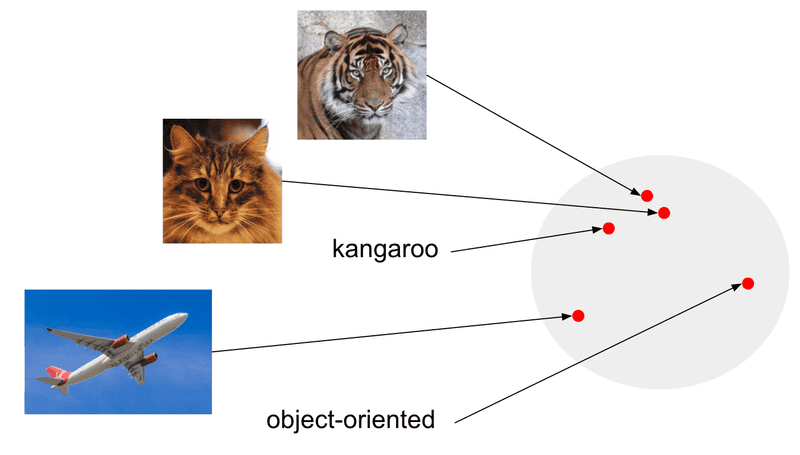

"Representation" is the way AIs understand the world. This post is a short introduction to representation learning in the "deep learning era."

This post shows how to add neatly arranged SNS share buttons to Gatsby blog posts.

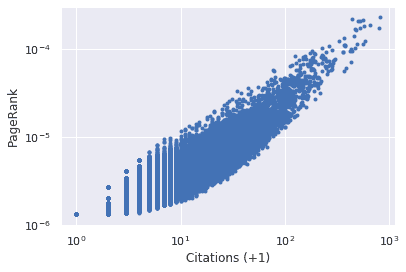

How does Google's PageRank work? Its theory and algorithm are explained, followed by numerical experiments.

Want to generate realistic images with a single GPU? This post demonstrates how to downsize StyleGAN2 with only slight performance degradation.

Citation counts shouldn't be the only measure of the impact of academic papers. I applied Google's PageRank to evaluate the importance of academic papers.

This post shows how to make a top navigation bar with a background image for a Gatsby blog.