Aligning LLMs without Paired Preference Labels

May 08, 2024 | 3 min read

In the previous post, I demonstrated how to align large language models (LLMs) with direct preference optimization (DPO), without the need for complex reinforcement learning. Since DPO was proposed, many improvements have been made, and one of them is Kahneman-Tversky optimization (KTO). KTO simplifies the alignment process even further. Instead of the more cumbersome pairwise preferences, it uses unpaired binary labels, which can be collected simply as thumbs-up or thumbs-down feedback.[1] This greatly eases data collection and makes it faster to align LLMs. Today, I'll show you how to use KTO to create the Reviewer #2 Bot from TinyLlama, just as I did with DPO in the previous post.

As with the DPO post, all the artifacts of this project are publicly accessible:

- Dataset: shionhonda/reviewer2-2k-unpaired

- Model: shionhonda/tiny-llama-reviewer2-1.1B-kto-lora

- Training script

- Training log

Also, if you are interested in the theory behind KTO, I recommend reading the original paper. In short, the KTO loss measures the value of a completion relative to a reference point. This idea is inspired by the prospect theory of Daniel Kahneman and Amos Tversky. As an additional benefit, KTO can align an LLM without first applying supervised fine-tuning (SFT), provided the base model is already good enough.
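For reference, here is the KTO objective as I read it in the original paper. r_θ is the implied reward, defined as the log-ratio between the policy π_θ and the frozen reference model π_ref. z_0 is the reference point. In practice it is estimated from the batch, clamped at zero, and not backpropagated through. σ is the logistic function. λ_D and λ_U weight desirable and undesirable examples, and λ_y denotes whichever of the two applies to y. β, λ_D and λ_U correspond to the beta, desirable_weight and undesirable_weight hyperparameters in the training configuration.

```latex
r_\theta(x, y) = \log \frac{\pi_\theta(y \mid x)}{\pi_\text{ref}(y \mid x)},
\qquad
z_0 = \mathrm{KL}\big(\pi_\theta(y' \mid x) \,\|\, \pi_\text{ref}(y' \mid x)\big)

v(x, y) =
\begin{cases}
\lambda_D \, \sigma\big(\beta \, (r_\theta(x, y) - z_0)\big) & \text{if } y \text{ is desirable given } x \\
\lambda_U \, \sigma\big(\beta \, (z_0 - r_\theta(x, y))\big) & \text{if } y \text{ is undesirable given } x
\end{cases}

\mathcal{L}_\text{KTO}(\pi_\theta, \pi_\text{ref}) = \mathbb{E}_{x, y \sim D}\big[\lambda_y - v(x, y)\big]
```

The sigmoid makes the value saturate as the reward moves far from z_0. This is the prospect-theory flavor of the loss: gains and losses are judged relative to the reference point, not in absolute terms.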

Setup

In this experiment, I used the following resources:

- Hardware: Colab L4 instance (22.5GB VRAM)

- Pretrained model: TinyLlama-1.1B-Chat

- Dataset: shionhonda/reviewer2-2k-unpaired

- KTO trainer: TRL v0.8.5

- LoRA: PEFT v0.10.0

Dataset

As I mentioned earlier, KTO doesn't require pairwise preferences. The KTO trainer works with a simple dataset that contains three columns:

- prompt: input text

- completion: output text

- label: a boolean indicating whether the completion is desirable (thumbs-up) or not (thumbs-down)

I created a dataset of this format (reviewer2-2k-unpaired) by unpairing the dataset for DPO (reviewer2-1k-paired). See the previous post for details about how I created the paired dataset.
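Unpairing is conceptually simple. The sketch below assumes the paired rows use the `prompt`/`chosen`/`rejected` column names common for DPO data. I haven't confirmed that these are the exact columns of reviewer2-1k-paired, and `unpair` is a hypothetical helper, not the script I actually used. Each pair yields two rows, one desirable and one undesirable, which would be consistent with 1k pairs turning into 2k unpaired examples.

```python
from typing import Dict, List


def unpair(paired_rows: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Hypothetical helper: split (prompt, chosen, rejected) rows into KTO rows.

    Assumes DPO-style column names; adjust them to the actual dataset.
    """
    unpaired: List[Dict[str, object]] = []
    for row in paired_rows:
        # The preferred response becomes a desirable (thumbs-up) example.
        unpaired.append(
            {"prompt": row["prompt"], "completion": row["chosen"], "label": True}
        )
        # The dispreferred response becomes an undesirable (thumbs-down) example.
        unpaired.append(
            {"prompt": row["prompt"], "completion": row["rejected"], "label": False}
        )
    return unpaired


if __name__ == "__main__":
    pairs = [{"prompt": "Review this paper.", "chosen": "Reject.", "rejected": "Accept."}]
    for r in unpair(pairs):
        print(r)
```

Since every prompt contributes exactly one desirable and one undesirable row, the resulting dataset is balanced by construction.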

KTO Training

Next, I ran the KTO trainer with the following configuration:

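```python
class Config:
    beta = 0.1  # the beta parameter for KTO loss
    desirable_weight = 1.0  # weight on desirable (thumbs-up) examples
    undesirable_weight = 1.0  # weight on undesirable (thumbs-down) examples
    learning_rate = 5e-4
    lr_scheduler_type = "cosine"
    optimizer_type = "paged_adamw_32bit"
    batch_size = 16
    lora_alpha = 16  # LoRA scaling factor
    lora_dropout = 0.05  # dropout applied to the LoRA layers
    lora_r = 8  # LoRA rank
    max_prompt_length = 256  # maximum prompt length in tokens
    max_length = 128  # maximum sequence length in tokens
    num_train_epochs = 8
```

The entire script is here and the training logs are here.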
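To make the role of `beta`, `desirable_weight` and `undesirable_weight` concrete, here is a minimal pure-Python sketch of the KTO loss. It is not the TRL implementation. It operates on scalar sequence log-probabilities and takes the KL reference point as a precomputed number (`kl_estimate`), both of which are simplifying assumptions. A real trainer works on batched tensors and estimates the reference point from the batch itself.

```python
import math
from typing import Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def kto_loss(
    policy_logps: Sequence[float],
    ref_logps: Sequence[float],
    labels: Sequence[bool],
    kl_estimate: float,
    beta: float = 0.1,
    desirable_weight: float = 1.0,
    undesirable_weight: float = 1.0,
) -> float:
    """Mean KTO loss over a batch of sequence log-probs (illustrative sketch)."""
    # Reference point z0: the KL estimate clamped at zero. In a real trainer
    # it would also be detached so no gradient flows through it.
    z0 = max(kl_estimate, 0.0)
    losses = []
    for logp, ref_logp, desirable in zip(policy_logps, ref_logps, labels):
        reward = logp - ref_logp  # log pi_theta(y|x) - log pi_ref(y|x)
        if desirable:
            # Thumbs-up: the loss shrinks as the reward rises above z0.
            value = desirable_weight * sigmoid(beta * (reward - z0))
            losses.append(desirable_weight - value)
        else:
            # Thumbs-down: the loss shrinks as the reward falls below z0.
            value = undesirable_weight * sigmoid(beta * (z0 - reward))
            losses.append(undesirable_weight - value)
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # When the policy equals the reference model, every example sits at sigmoid(0).
    print(kto_loss([-10.0, -12.0], [-10.0, -12.0], [True, False], kl_estimate=0.0))
```

Running it prints `0.5`: with a zero reward and a zero reference point, each term is `1 - sigmoid(0)`. With both weights set to 1.0, as in the configuration above, desirable and undesirable examples count equally. That seems a natural choice here, since the unpaired dataset contains one of each per prompt.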

Results

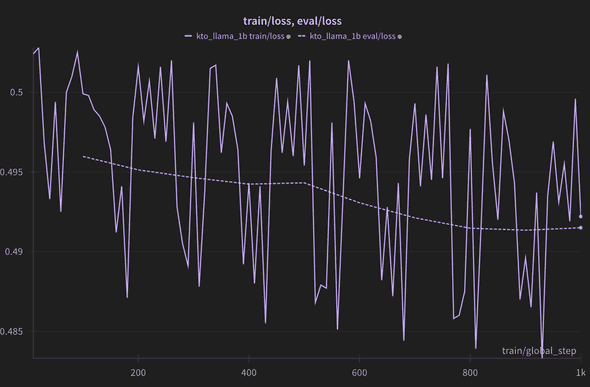

Here are the learning curves:

They look good as the validation loss is decreasing and the reward is increasing steadily. This is notably different from the DPO training in my previous post, where the they were fluctuating.

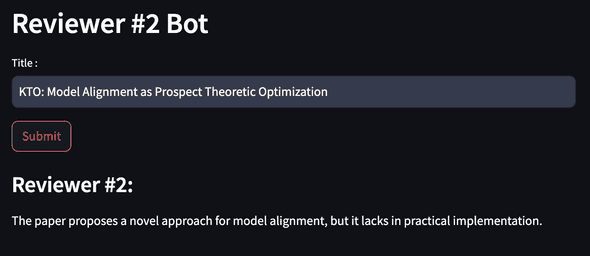

The KTO-trained model looks better than the DPO-trained model qualitatively as well. You can see it by chatting with the bot here. For busy readers, I share a sample output here:

I speculate that KTO worked better than DPO because KTO is more robust to noisy data. According to the original paper:

> our theoretical analysis suggests that if your preference data has sufficiently little noise and sufficiently little intransitivity, then DPO will work better, since there is some risk of KTO underfitting. But if there is enough noise and intransitivity, then the better worst-case guarantees of KTO will win out.

In this setting, some of the data (though not the labels) is likely noisy, because I faked the prompts when creating the dataset. If KTO is indeed better than DPO at tolerating such noise, that could explain why it worked better here.

Conclusion

In this article, I showed how to align LLMs without paired preference data using KTO. As paired preference data is harder to collect than unpaired data, I believe that we will see more and more use cases of KTO in the near future. I hope this article helps you create your own KTO-trained LLMs!

---

[1] Typically, you need to show two variations of outputs to the user and ask them which one they prefer.


Written by Shion Honda. If you like this, please share!