Stats with Python: Sample Correlation Coefficient is Biased

February 24, 2021 | 6 min read | 776 views

Have you ever wondered how much bias the sample correlation coefficient has with respect to the population correlation coefficient ? In fact, even if the sample size is about 20, there will be a bias of up to 5%, depending on the value of . This post visualizes how large the bias is and shows how to fix it.

Definition: Pearson’s r

As already discussed in the previous post, Pearson product-moment correlation coefficient, or simply Pearson’s r of the paired sequences is given as:

where and are the sample means. This is the sample correlation coefficient. For a population, the population correlation coefficient of the paired random variables and is defined as:

Pearson’s r For a Sample is Biased!

So, is the sample correlation coefficient an unbiased estimator of the population correlation coefficient ? Unfortunately, the answer is no. is only asymptotically unbiased, so when the sample size is small, you need to care about its bias.

In this post, I assume the data and follow a bivariate normal distribution and experiment with unbiased estimators of the correlation coefficient. Then, the exact density function is given as:

where is the gamma function and is the Gaussian hypergeometric function.

From the above scary-looking equation, Olkin et al. [1] derived the unique minimum variance unbiased estimator (MVUE):

This formula is too complicated, so there is an approximated version:

How large is the bias of the sample correlation coefficient? How good is the approximation? To answer these questions, I conducted some experiments in the next section.

Experiment

The code I used for the experiments below is available at Colaboratory.

I obtained correlated sequences and their correlation coefficients in the following way:

- Draw samples from

- Draw samples from

- Given (), obtain samples by

- Compute the biased, the unbiased, and the adjusted correlation coefficients

Here, determines the correlation between and . That is, and have a perfect correlation when , and they have a zero correlation when . Formally, we have

Therefore, the population correlation coefficient is expressed as a function of :

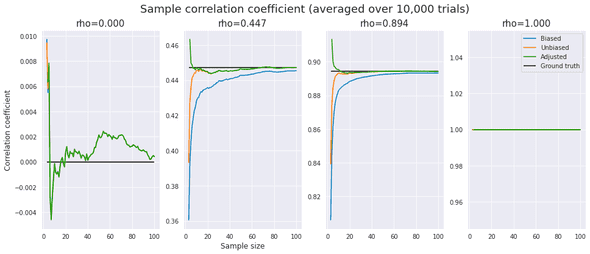

First, for different values of and (sample size), I plotted the biased, the unbiased, and the adjusted correlation coefficients to see their bias from the population statistic and their asymptotic behavior. To alleviate the effects of stochastic noise, I took an average over 10,000 trials. For calculating , scipy provides hyp2f1 function.

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import scipy.special as sc

from tqdm.notebook import tqdm

sns.set_style("darkgrid")

def gen_data(p=0.5, n=100, k=10000):

x = np.random.randn(n, k)

e = np.random.randn(n, k)

y = p * x + (1-p) * e

biased = []

unbiased = []

adjusted = []

for i in tqdm(range(3, n+1)):

r_biased = []

r_unbiased = []

r_adjusted = []

for j in range(k):

r = np.corrcoef(x[:i, j], y[:i, j])[0, 1]

r_biased.append(r)

r_unbiased.append(r * sc.hyp2f1(0.5, 0.5, (i-1)/2, 1-r**2))

r = r * (1 + (1 - r**2) / (2 * (i-3)))

r_adjusted.append(r)

biased.append(np.mean(r_biased))

unbiased.append(np.mean(r_unbiased))

adjusted.append(np.mean(r_adjusted))

return biased, unbiased, adjusted

def population_r(p):

return p / (2*p**2 - 2*p + 1)**0.5

ps = [0, 1/3, 2/3, 1]

fig = plt.figure(figsize=(16,6))

for i,p in enumerate(ps):

ax = fig.add_subplot(1, 4, i+1)

biased, unbiased, adjusted = gen_data(p, n=n, k=10000)

rho = population_r(p)

x = np.arange(3, n+1)

ax.plot(x, biased, label="Biased")

ax.plot(x, unbiased, label="Unbiased")

ax.plot(x, adjusted, label="Adjusted")

ax.hlines(rho, x[0], x[-1], label="Ground truth")

if i==1:

ax.set_xlabel("Sample size", fontsize=12)

if i==0:

ax.set_ylabel("Correlation coefficient", fontsize=12)

if i==3:

ax.legend()

ax.set_title(f"rho={rho:.3f}", fontsize=15)

fig.suptitle("Sample correlation coefficient (averaged over 10,000 trials)", fontsize=18)

plt.show()One can observe the following:

- The “biased estimator” is indeed asymptotically unbiased, but the bias remains non-subtle even when .

- It looks like neither the “unbiased” estimator nor the “adjusted” estimator is completely unbiased. Still, they are significantly more accurate than the biased estimator.

- The bias depends on as well as . It seems that the smaller is, the larger the relative bias is.

- When (perfect correlation), .

- When (zero correlation), .

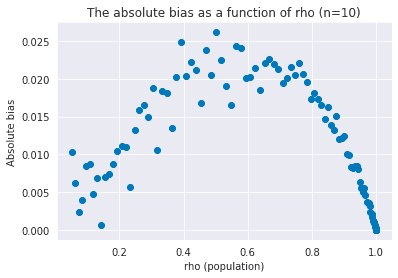

To better picture how the bias changes according to , let’s think about absolute bias for the case where . This is untractable due to the hypergeometric term, so I consider the approximated version:

The absolute bias reaches its maximum when (i.e. ) because:

The numerical experiment produced the expected result.

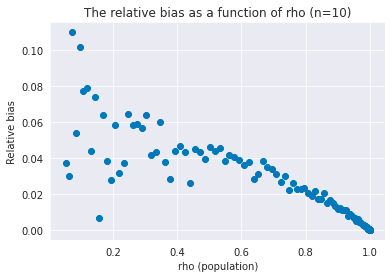

In a similar way, the approximated relative bias takes its maximum value when .

Conclusion

- The sample correlation coefficient is not an unbiased estimator of the population correlation coefficient . The bias remains untrivial when

- For the data that follow a bivariate normal distribution, the exact form of minimum variance unbiased estimator is known

- The approximated version is accurate enough and far handier

- The smaller is, the larger the relative bias of is

References

[1] Ingram Olkin, John W. Pratt. ”Unbiased Estimation of Certain Correlation Coefficients“. Ann. Math. Statist. 1958.

[2] Pearson correlation coefficient - Wikipedia

![[object Object]](/static/2d0f4e01d6e61412b3e92139e5695299/e9fba/profile-pic.png)

Written by Shion Honda. If you like this, please share!