Stats with Python: Finite Population Correction

January 29, 2021

It is a common mistake to assume independence between samples drawn without replacement from a finite population. This can lead to misestimating, for example, the variance of the sample mean.

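Suppose you have $n$ samples $X_1, X_2, \dots, X_n$, drawn without replacement from a finite population of size $N$ whose values $x_1, \dots, x_N$ have mean $\mu = \frac{1}{N}\sum_{k=1}^{N} x_k$ and variance $\sigma^2 = \frac{1}{N}\sum_{k=1}^{N}(x_k - \mu)^2$, and you want to estimate the mean and variance of the sample mean $\bar{X}$. As in the previous post, the sample mean is defined as:

$$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$$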

Note that the samples are identically distributed but not independent of each other. For example, once $X_1$ has taken some population value, $X_2$ is drawn only from the remaining $N-1$ values. Nevertheless, the mean of the sample mean is the same as in sampling with replacement:

$$E[\bar{X}] = \mu$$
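The dependence between draws can be checked by exact enumeration. The sketch below lists every equally likely ordered pair $(X_1, X_2)$ drawn without replacement from a small population and compares the marginal and joint probabilities. The population `[1, 2, 3, 4]` is hypothetical, chosen only for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import permutations

# Hypothetical small population of N = 4 distinct values
population = [1, 2, 3, 4]

# Every ordered pair of distinct elements is an equally likely outcome of (X_1, X_2)
pairs = list(permutations(population, 2))
p = Fraction(1, len(pairs))  # each pair has probability 1 / (N * (N - 1))

# Marginal distributions of X_1 and X_2
marginal_1 = Counter()
marginal_2 = Counter()
for a, b in pairs:
    marginal_1[a] += p
    marginal_2[b] += p

# Identically distributed: both marginals are uniform over the population
print(marginal_1 == marginal_2)  # True
print(marginal_2[1])             # 1/4

# Not independent: P(X_1 = 1, X_2 = 1) = 0, but P(X_1 = 1) * P(X_2 = 1) = 1/16
joint_11 = sum(p for a, b in pairs if a == 1 and b == 1)
print(joint_11, marginal_1[1] * marginal_2[1])  # 0 1/16
```

Exact `Fraction` arithmetic is used so that the comparisons hold exactly rather than up to floating-point error.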

However, the variance of the sample mean is not $\sigma^2/n$:

$$V[\bar{X}] = \frac{N-n}{N-1} \cdot \frac{\sigma^2}{n}$$

The factor $\frac{N-n}{N-1}$ is called the finite population correction. When $N \gg n$, this factor approaches 1 and can be ignored. But when $n$ is not negligible compared to $N$, the factor is noticeably smaller than 1 and cannot be ignored. In this post, I'll visualize the effect of the finite population correction and then give a brief proof of the formulae above.
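As a quick sanity check of the corrected formula, here is a minimal sketch that computes the variance of the sample mean exactly by enumerating every size-$n$ subset of a tiny population and compares it with $\frac{N-n}{N-1}\cdot\frac{\sigma^2}{n}$. The helper names `fpc`, `pop_var`, and `exact_var_of_mean` and the population values are hypothetical, chosen only for illustration; `pop_var` divides by the number of values, matching the definition of $\sigma^2$.

```python
from fractions import Fraction
from itertools import combinations


def fpc(N, n):
    """Finite population correction factor (N - n) / (N - 1), as an exact Fraction."""
    return Fraction(N - n, N - 1)


def pop_var(values):
    """Population variance (divides by the number of values), computed exactly."""
    values = [Fraction(v) for v in values]
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def exact_var_of_mean(population, n):
    """Variance of the sample mean over all equally likely size-n samples drawn
    without replacement (unordered, since order does not change the mean)."""
    means = [Fraction(sum(s), n) for s in combinations(population, n)]
    return pop_var(means)


# Hypothetical small population, chosen only for illustration
population = [2, 3, 5, 7, 11, 13]
N = len(population)
sigma2 = pop_var(population)

for n in range(1, N + 1):
    # Exact enumeration agrees with the corrected formula for every n ...
    assert exact_var_of_mean(population, n) == fpc(N, n) * sigma2 / n
    # ... while the uncorrected sigma^2 / n is too large whenever n > 1
    print(n, float(exact_var_of_mean(population, n)), float(sigma2 / n))

print(float(fpc(10_000, 10)))  # ≈ 0.9991: negligible when N >> n
print(float(fpc(100, 50)))     # ≈ 0.505: roughly halves the variance when n = N / 2
```

Note that `fpc(N, N)` is exactly 0, so sampling the whole population leaves no variance in the sample mean.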

Visualizing Finite Population Correction

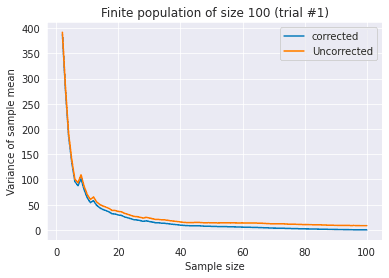

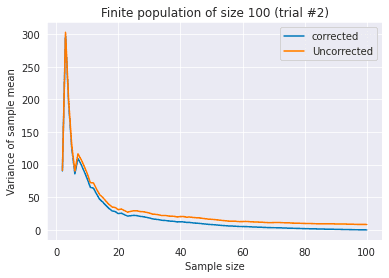

Consider a finite population and samples without replacement from this population. In the following two figures, I plot the corrected variance of sample mean and uncorrected version against different sample sizes , with different random seeds.

In both figures, we see that as the sample size grows, the variance of the sample mean approaches 0, consistent with the law of large numbers. Specifically, when $n = N$, the corrected variance is exactly 0. This is natural, since a sample of size $N$ drawn without replacement is the whole population, so its mean is always $\mu$. The uncorrected variance does not satisfy this condition, so it is clear that you should apply the finite population correction when the population is finite and samples are drawn without replacement.

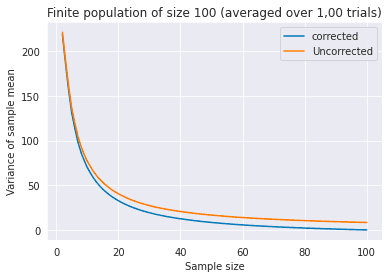

Using the following code, I repeated this experiment 1,000 times and plotted the average values in the following figure. The difference of corrected and uncorrected variance is clearer here.

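```python
import numpy as np
import matplotlib.pyplot as plt

N = 100  # population size; the population is {0, 1, ..., N-1}
corrected_vars = []
uncorrected_vars = []

for _ in range(1000):
    # A random permutation of the population; its first n elements form
    # a sample of size n drawn without replacement
    rands = np.random.choice(N, N, replace=False)
    corrected = []
    uncorrected = []
    for n in range(2, N + 1):
        # Plug-in estimate of the variance of the sample mean (np.var uses ddof=0)
        var_of_mean = np.var(rands[:n]) / n
        corrected.append(var_of_mean * (N - n) / (N - 1))
        uncorrected.append(var_of_mean)
    corrected_vars.append(corrected)
    uncorrected_vars.append(uncorrected)

# Average each curve over the 1,000 trials
x = np.arange(2, N + 1)
plt.plot(x, np.mean(corrected_vars, axis=0), label="Corrected")
plt.plot(x, np.mean(uncorrected_vars, axis=0), label="Uncorrected")
plt.xlabel("Sample size")
plt.ylabel("Variance of sample mean")
plt.legend()
plt.title("Finite population of size 100 (averaged over 1,000 trials)")
plt.show()
```

Proof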

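The mean of the sample mean is the same as in the case of sampling with replacement, because each $X_i$ on its own is equally likely to be any of the $N$ population values, so $E[X_i] = \mu$:

$$E[\bar{X}] = \frac{1}{n}\sum_{i=1}^{n} E[X_i] = \mu$$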
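The argument above can be verified exactly on a small example. This sketch enumerates every equally likely ordered sample of size $n$ drawn without replacement, checks that each position $X_i$ has mean $\mu$, and confirms $E[\bar{X}] = \mu$. The population `[1, 4, 6, 9, 10]` and `n = 3` are hypothetical, chosen only for illustration.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical small population and sample size, chosen only for illustration
population = [1, 4, 6, 9, 10]
N, n = len(population), 3
mu = Fraction(sum(population), N)

# Every ordered sample of n distinct elements is equally likely
samples = list(permutations(population, n))

# Each position X_i is marginally uniform over the population, so E[X_i] = mu
for i in range(n):
    assert sum(Fraction(s[i]) for s in samples) / len(samples) == mu

# Averaging the sample means over all samples recovers mu exactly
expected_mean = sum(Fraction(sum(s), n) for s in samples) / len(samples)
print(expected_mean == mu)  # True
```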

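The variance of the sample mean, however, has an additional covariance term, because the $X_i$ are not independent:

$$V[\bar{X}] = \frac{1}{n^2}\left(\sum_{i=1}^{n} V[X_i] + \sum_{i \neq j} \mathrm{Cov}[X_i, X_j]\right) = \frac{\sigma^2}{n} + \frac{n-1}{n}\,\mathrm{Cov}[X_i, X_j]$$

where, by symmetry, $\mathrm{Cov}[X_i, X_j]$ takes the same value for every pair $i \neq j$.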

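Here, for $i \neq j$, the pair $(X_i, X_j)$ is equally likely to be any ordered pair $(x_k, x_l)$ of distinct population values, and $\sum_{k=1}^{N}(x_k - \mu) = 0$, so

$$\mathrm{Cov}[X_i, X_j] = \frac{1}{N(N-1)}\sum_{k \neq l}(x_k - \mu)(x_l - \mu) = \frac{1}{N(N-1)}\left[\left(\sum_{k=1}^{N}(x_k - \mu)\right)^2 - \sum_{k=1}^{N}(x_k - \mu)^2\right] = \frac{0 - N\sigma^2}{N(N-1)} = -\frac{\sigma^2}{N-1}$$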

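Thus,

$$V[\bar{X}] = \frac{\sigma^2}{n} - \frac{n-1}{n} \cdot \frac{\sigma^2}{N-1} = \frac{\sigma^2}{n}\left(1 - \frac{n-1}{N-1}\right) = \frac{N-n}{N-1} \cdot \frac{\sigma^2}{n}$$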
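Each step of the proof can be checked with exact arithmetic on a small population. The sketch below computes the covariance by averaging over all ordered pairs of distinct values, then confirms that the variance decomposition and the final formula both match the variance of the sample mean obtained by enumerating every ordered sample. The helper `pop_var` and the population values are hypothetical, chosen only for illustration.

```python
from fractions import Fraction
from itertools import permutations


def pop_var(values):
    """Population variance (divides by the number of values), computed exactly."""
    values = [Fraction(v) for v in values]
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


# Hypothetical population, chosen only for illustration
population = [1, 4, 6, 9, 10]
N = len(population)
mu = Fraction(sum(population), N)
sigma2 = pop_var(population)

# Cov[X_i, X_j] for i != j: average of (x_k - mu)(x_l - mu) over ordered pairs k != l
pairs = list(permutations(population, 2))
cov = sum((a - mu) * (b - mu) for a, b in pairs) / len(pairs)
assert cov == -sigma2 / (N - 1)

# Plug the covariance into V[X_bar] = sigma^2/n + (n-1)/n * Cov and compare with
# the variance obtained by enumerating every ordered sample of size n
for n in range(1, N + 1):
    decomposed = sigma2 / n + Fraction(n - 1, n) * cov
    means = [Fraction(sum(s), n) for s in permutations(population, n)]
    assert pop_var(means) == decomposed == Fraction(N - n, N - 1) * sigma2 / n

print("covariance:", cov, "sigma^2:", sigma2)  # covariance: -27/10 sigma^2: 54/5
```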

Intuition

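The uncorrected version ignores the covariance term $\mathrm{Cov}[X_i, X_j] = -\sigma^2/(N-1)$, which is negative. Intuitively, a value that has already been drawn cannot be drawn again, so a large draw makes the remaining draws more likely to be small, and vice versa; these deviations partly cancel in the sample mean. Ignoring this leads to overestimation of the variance.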
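The overestimation can also be seen by simulation. This is a minimal sketch, assuming the population $\{0, 1, \dots, 99\}$ used in the experiment above and a hypothetical sample size of 50: it draws many samples without replacement, measures the spread of their means, and compares it with the corrected and uncorrected formulas. The seed, sample size, and number of trials are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed for reproducibility
population = np.arange(100)     # the population {0, ..., 99}
N, n, trials = len(population), 50, 20_000
sigma2 = population.var()       # population variance (ddof=0)

# Simulate many samples of size n drawn without replacement and record their means
means = np.array([rng.choice(population, size=n, replace=False).mean()
                  for _ in range(trials)])

simulated = means.var()
uncorrected = sigma2 / n
corrected = (N - n) / (N - 1) * sigma2 / n
print(f"simulated {simulated:.2f}, corrected {corrected:.2f}, uncorrected {uncorrected:.2f}")
```

The simulated variance should land close to the corrected value and well below the uncorrected one, since here $n = N/2$ and the correction factor is about 0.5.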


Written by Shion Honda.