Aligning LLMs without Reinforcement Learning

April 27, 2024 | 3 min read

About a year ago, I discussed reinforcement learning from human feedback (RLHF) on this blog and showcased its practical applications. However, the field evolves rapidly, and a few months later a new method emerged: direct preference optimization (DPO). DPO simplifies the alignment of large language models (LLMs) by eliminating the reinforcement learning step, which is often complex. As a result, many developers, such as Mistral AI [1] and Meta [2], have already adopted DPO to train their LLMs.

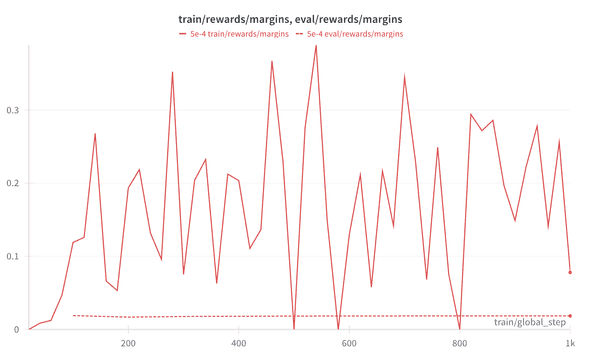

In this article, I will explain how to use DPO to create your very own LLM, like the one I created: the Reviewer #2 Bot, built from TinyLlama. This bot gives a bitter review on any paper you submit. [3] Curious to see it in action? Check it out on Hugging Face Spaces.

For your reference, all the artifacts of this project are publicly accessible:

- Dataset shionhonda/reviewer2-1k-paired

- Model shionhonda/tiny-llama-reviewer2-1.1B-dpo-lora

- Training script

- Training log

Also, if you are interested in the theory behind DPO, I recommend reading the original paper. Simply put, the authors derived the optimal policy corresponding to a reward model in closed form, which let them solve the RLHF problem with a classification loss.
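To make the "classification loss" concrete, here is a minimal sketch of the DPO objective for a single preference pair, written in plain Python. It assumes you already have the summed log-probabilities of each response under the trained policy and under the frozen reference model; the function name `dpo_loss` and its argument names are my own, not taken from the training script.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # Implicit rewards: how much the policy raised each response's
    # log-probability relative to the reference model, scaled by beta.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # Binary classification loss: -log(sigmoid(margin)) = softplus(-margin),
    # computed in a numerically stable way.
    x = -margin
    loss = max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return loss, chosen_reward, rejected_reward
```

When the policy is identical to the reference model, the margin is zero and the loss equals log 2, which is the starting point of training. The loss decreases as the policy shifts probability toward the chosen response and away from the rejected one.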

Setup

In this experiment, I used the following resources:

- Hardware: Colab L4 instance (22.5GB VRAM)

- Pretrained model: TinyLlama-1.1B-Chat

- Dataset: shionhonda/reviewer2-1k-paired

- DPO trainer: TRL v0.8.5

- LoRA: PEFT v0.10.0

Preference Dataset

The DPO trainer requires a preference dataset that contains 3 columns:

- prompt: input text

- chosen: preferred output text

- rejected: non-preferred output text
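As a quick illustration of this format, the sketch below shows one record and a small sanity check. The record is made up for illustration (it is not a row from the dataset), and `is_valid_pair` is a hypothetical helper, not part of TRL.

```python
REQUIRED_COLUMNS = ("prompt", "chosen", "rejected")

# Illustrative record; the texts are invented, not copied from the dataset.
example = {
    "prompt": "Generate a review about the paper <Title>.",
    "chosen": "The paper lacks novelty.",
    "rejected": "The paper is novel.",
}

def is_valid_pair(record):
    """True if all three columns are non-empty strings and the two outputs differ."""
    return (
        all(isinstance(record.get(col), str) and record[col] for col in REQUIRED_COLUMNS)
        and record["chosen"] != record["rejected"]
    )
```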

To quickly build a dataset of this format (reviewer2-1k-paired), I took a creative route:

- Collect 1,100 titles from NeurIPS 2023 accepted papers

- Ask TinyLlama to generate negative reviews about the papers by literally asking “generate negative reviews” with examples

- Ask TinyLlama to generate positive reviews about the papers by literally asking “generate positive reviews” with examples

- Combine the two sets of outputs into a preference dataset: label negative reviews as “chosen” and positive reviews as “rejected”, remove “positive” and “negative” from the prompts, and interleave the positive and negative examples

- Use 1,000 pairs for training and 100 pairs for validation
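The last two steps can be sketched roughly as follows. This is not the actual script: `build_splits` and the `make_prompt` callable are hypothetical names, the reviews are assumed to be already generated, and the shuffle with a fixed seed is my own assumption about how the split might be made.

```python
import random

def build_splits(titles, negative_reviews, positive_reviews, make_prompt,
                 n_train=1000, n_val=100, seed=0):
    """Pair up generated reviews and split them into train/validation records."""
    if not (len(titles) == len(negative_reviews) == len(positive_reviews)):
        raise ValueError("titles and reviews must have the same length")
    if len(titles) < n_train + n_val:
        raise ValueError("not enough pairs for the requested split sizes")
    # Negative reviews are what Reviewer #2 should prefer, so they are "chosen".
    records = [
        {"prompt": make_prompt(title), "chosen": neg, "rejected": pos}
        for title, neg, pos in zip(titles, negative_reviews, positive_reviews)
    ]
    # Assumption: shuffle before splitting so both sets cover similar titles.
    random.Random(seed).shuffle(records)
    return records[:n_train], records[n_train:n_train + n_val]
```

With 1,100 titles this yields 1,000 training records and 100 validation records, matching the sizes above.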

Let me explain the prompt trick in more detail. For example, we can generate a negative review with this prompt:

    Generate a negative review about the paper <Title>.
    Example 1: This paper is not well-written.
    Example 2: The paper lacks novelty.
    Your review:

And a positive review with this prompt:

    Generate a positive review about the paper <Title>.
    Example 1: This paper is well-written.
    Example 2: The paper is novel.
    Your review:

In the preference dataset, we can synthesize a prompt by combining them:

    Generate a review about the paper <Title>.
    Example 1: This paper is not well-written.
    Example 2: The paper is novel.
    Your review:

This way, we can avoid the tedious work of manually evaluating 1,100 pairs to see which is positive or negative. In theory, this breaks an assumption of DPO, because the values in the “prompt” column are supposed to be the exact prompts used to generate the outputs in the “chosen” and “rejected” columns. However, as we will see later in this article, I found that the model can still learn to generate negative reviews from this synthetic dataset.
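All three prompt variants can come from one small helper. The sketch below is an illustration rather than the code I actually ran: `make_prompt` and the two constants are hypothetical names, lines are joined with single newlines, and the neutral prompt alternates between negative and positive examples by position, which reproduces the combined prompt shown above.

```python
NEGATIVE_EXAMPLES = ["This paper is not well-written.", "The paper lacks novelty."]
POSITIVE_EXAMPLES = ["This paper is well-written.", "The paper is novel."]

def make_prompt(title, sentiment=None):
    """Build a generation prompt; sentiment is 'negative', 'positive', or None."""
    if sentiment == "negative":
        examples = NEGATIVE_EXAMPLES
    elif sentiment == "positive":
        examples = POSITIVE_EXAMPLES
    elif sentiment is None:
        # Neutral dataset prompt: alternate negative and positive examples.
        examples = [
            neg if i % 2 == 0 else pos
            for i, (neg, pos) in enumerate(zip(NEGATIVE_EXAMPLES, POSITIVE_EXAMPLES))
        ]
    else:
        raise ValueError(f"unknown sentiment: {sentiment!r}")
    kind = f"{sentiment} " if sentiment else ""
    lines = [f"Generate a {kind}review about the paper {title}."]
    lines += [f"Example {i}: {text}" for i, text in enumerate(examples, start=1)]
    lines.append("Your review:")
    return "\n".join(lines)
```

Calling `make_prompt(title, "negative")` and `make_prompt(title, "positive")` gives the two generation prompts, while `make_prompt(title)` gives the neutral prompt stored in the “prompt” column.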

DPO Training

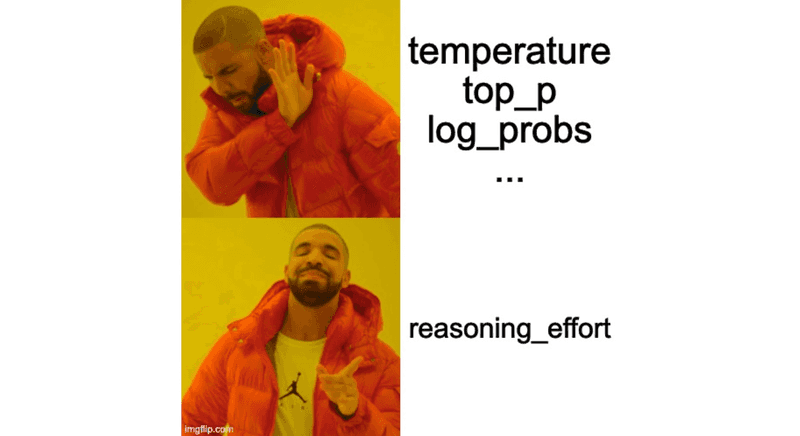

Next, I ran the DPO trainer with the following configuration:

    class Config:
        beta = 0.1  # the beta parameter for DPO loss
        learning_rate = 5e-4
        lr_scheduler_type = "cosine"
        optimizer_type = "paged_adamw_32bit"
        batch_size = 10
        lora_alpha = 16
        lora_dropout = 0.05
        lora_r = 8
        max_prompt_length = 256
        max_length = 128
        max_steps = 2000

The entire script is here and the training logs are here.
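For readers who want to see how such a configuration might reach the trainer, here is a rough sketch. It is not the linked script. It assumes the TRL v0.8.x interface, where `beta`, `max_length`, and `max_prompt_length` are passed directly to `DPOTrainer` (later TRL releases moved them into a separate config object). `build_dpo_trainer`, `model_name`, and `output_dir` are hypothetical names introduced for illustration.

```python
def build_dpo_trainer(cfg, model_name, train_dataset, eval_dataset, output_dir="dpo-out"):
    """Wire a Config-like object into TRL's DPOTrainer (v0.8.x-style arguments)."""
    # Heavy dependencies are imported lazily, so defining this function is cheap.
    from peft import LoraConfig
    from transformers import AutoModelForCausalLM, AutoTokenizer, TrainingArguments
    from trl import DPOTrainer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    if tokenizer.pad_token is None:
        # Assumption: fall back to EOS for padding if the tokenizer defines none.
        tokenizer.pad_token = tokenizer.eos_token
    model = AutoModelForCausalLM.from_pretrained(model_name)

    peft_config = LoraConfig(
        r=cfg.lora_r,
        lora_alpha=cfg.lora_alpha,
        lora_dropout=cfg.lora_dropout,
        task_type="CAUSAL_LM",
    )
    args = TrainingArguments(
        output_dir=output_dir,
        per_device_train_batch_size=cfg.batch_size,
        learning_rate=cfg.learning_rate,
        lr_scheduler_type=cfg.lr_scheduler_type,
        optim=cfg.optimizer_type,  # "paged_adamw_32bit" needs bitsandbytes installed
        max_steps=cfg.max_steps,
        remove_unused_columns=False,  # keep the prompt/chosen/rejected columns
    )
    return DPOTrainer(
        model,
        ref_model=None,  # with a peft_config, the adapter-free base model acts as reference
        args=args,
        beta=cfg.beta,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
        tokenizer=tokenizer,
        peft_config=peft_config,
        max_prompt_length=cfg.max_prompt_length,
        max_length=cfg.max_length,
    )
```

One thing worth double-checking if you reuse these values: TRL documents `max_length` as the limit for the whole sequence (prompt plus response), so it is usually set larger than `max_prompt_length`.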

Results

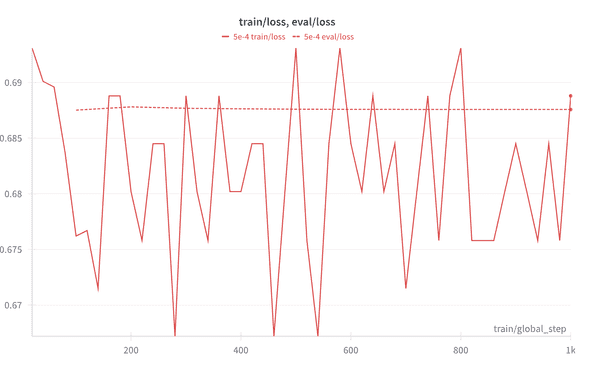

Here are the learning curves:

Well, it doesn’t seem to work well. The training loss keeps fluctuating and the validation loss does not decrease at all. The reward shows similar behavior. I tried different hyperparameters, but the results were similar. I suspect that this is because I faked the prompts in the preference dataset. However, when we look at the generated outputs, they are not bad! Here is an example:

Yes, this is a harsh review that I would expect from Reviewer #2!

Conclusion

DPO is a novel approach to aligning LLMs without reinforcement learning, and it has already been adopted for many successful LLMs. In this example, I showed how to train the Reviewer #2 Bot with DPO. The learning curves were not great, but the generated outputs show signs of success.

If you are interested in training larger models, I also recommend this article, in which the authors train a 7B-parameter model with DPO. Also, if your dataset is not paired, you can use a method called Kahneman-Tversky Optimization (KTO). TRL already supports KTO, so you can try it out. [4]
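To give a feel for what "not paired" means, the sketch below turns paired records into unpaired rows. It assumes the `prompt` / `completion` / `label` layout described in TRL's KTO trainer documentation, where `label` is a boolean marking a desirable completion. `paired_to_unpaired` is a hypothetical helper of mine, and in practice your unpaired data would come directly from thumbs-up and thumbs-down style feedback rather than from pairs.

```python
def paired_to_unpaired(records):
    """Split each (prompt, chosen, rejected) record into two labeled rows."""
    rows = []
    for record in records:
        # The chosen output becomes a desirable example.
        rows.append({"prompt": record["prompt"], "completion": record["chosen"], "label": True})
        # The rejected output becomes an undesirable example.
        rows.append({"prompt": record["prompt"], "completion": record["rejected"], "label": False})
    return rows
```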

I hope this article helps you create your own DPO-trained LLMs!


Written by Shion Honda. If you like this, please share!