Looking Back on 2025 Through the Books I Read

January 05, 2026 | 11 min read

Under the sea, in the hippocampus's garden...


A look back at the books I read in 2025.


This article picks five notable AI papers from 2025 and summarizes their key ideas and limitations, including reinforcement learning for reasoning, agent benchmarks, long-task metrics, and a statistical explanation of hallucinations.

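In an era when AI is turning knowledge into a commodity, this post examines where human value is still created, through the lenses of frontier knowledge and the premium on taking action.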

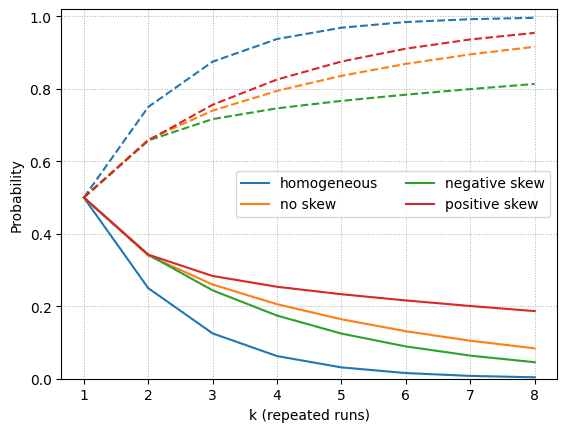

These metrics capture coverage and reliability.

Some LLMs disable sampling knobs like temperature and top_p. Here’s why.

A deep dive into how LLMs serialize prompts, output schemas, and tool descriptions into a token sequence, with examples from Llama 4's implementation.

I translated *The Diary of Anne Frank* into Japanese using GPT-4.1.

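A summary of, and my thoughts on, *Computer Organization and Design* (known in Japanese as the "PataHene book," after its authors Patterson and Hennessy).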

A deep dive into how databases work.