Aligning LLMs without Reinforcement Learning

April 27, 2024 | 3 min read

DPO reduces the effort required to align LLMs. Here is how I created the Reviewer #2 Bot from TinyLlama using DPO.

Under the sea, in the hippocampus's garden...

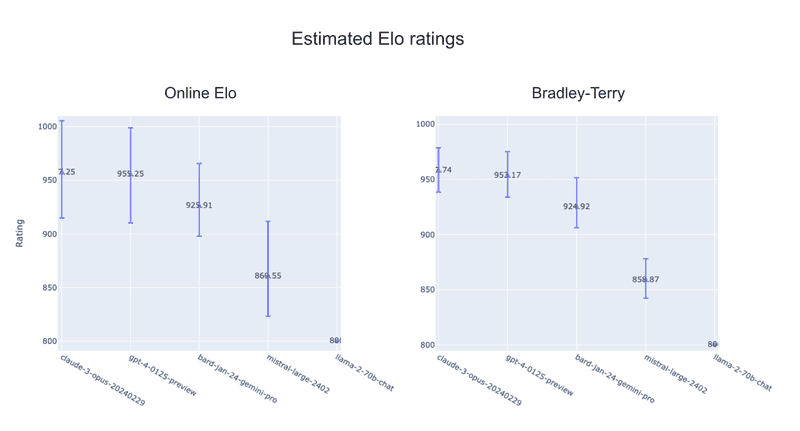

Chatbot Arena updated its LLM ranking method from Elo to Bradley-Terry. What changed? Let's dig into the differences.

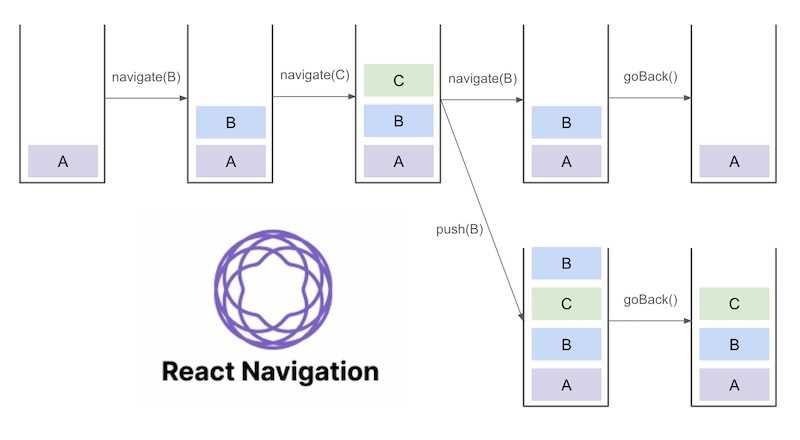

Does your React Native app go back to an unexpected screen? Here's how to deal with it.

After reading 『デュアルキャリア・カップル』 ("Dual-Career Couples"), I write about what my spouse and I discussed in order to get through the "first transition."

Let's look back at the significant progress made in deep learning in 2023! Here are my 10 favorite papers.

Can LLMs answer scientific questions? See how Kaggle winners used LLMs and RAG!

Discover the power of Server-Sent Events in Flask for a better developer experience when building chatbots.

What I cannot create, I do not understand. Train your own LLM!

I took a job as a software engineer at a French startup, so I write about that experience.