On Optimal Threshold for Maximizing F1 Score

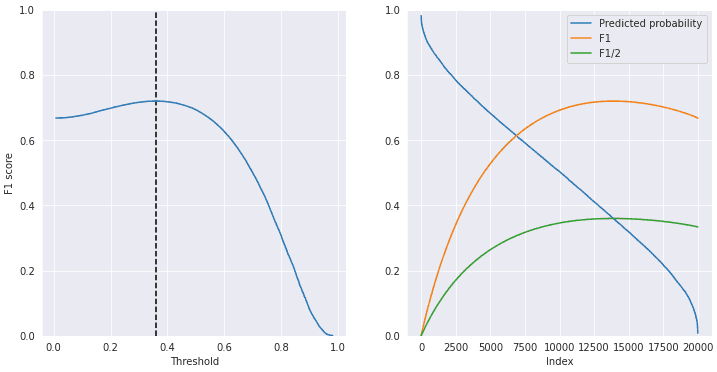

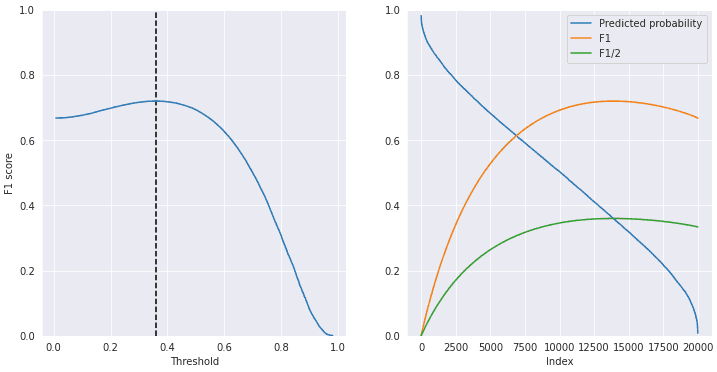

May 15, 2021 | 9 min read

This post takes a deeper look at the F1 score. Did you know that, for calibrated classifiers, the optimal threshold is half the maximum F1 score? Why? This post explains.
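To see the claim numerically, here is a minimal simulation sketch rather than the post's own derivation. It assumes scores drawn uniformly on [0, 1] and labels drawn as Bernoulli(score), which makes the classifier calibrated by construction. The helper `f1_curve` is a hypothetical name used only for illustration. For this particular setup the expected F1 at threshold t works out to (1 - t²) / (1.5 - t), which peaks at t = (3 - √5) / 2 ≈ 0.382 with F1 = 3 - √5 ≈ 0.764, i.e. exactly twice the threshold.

```python
import numpy as np


def f1_curve(y_true, scores):
    """Return (thresholds, f1) for the rule "predict positive if score >= threshold"."""
    order = np.argsort(-scores, kind="stable")  # highest scores first
    y_sorted = y_true[order]
    thresholds = scores[order]
    tp = np.cumsum(y_sorted)                    # true positives when the top k are predicted positive
    k = np.arange(1, len(y_sorted) + 1)         # number of predicted positives
    n_pos = y_sorted.sum()                      # number of actual positives
    f1 = 2 * tp / (k + n_pos)                   # F1 = 2TP / (predicted positives + actual positives)
    return thresholds, f1


rng = np.random.default_rng(0)
n = 1_000_000
scores = rng.uniform(0.0, 1.0, size=n)
# Calibrated by construction (an assumption of this sketch): P(y = 1 | score) = score.
y = (rng.uniform(0.0, 1.0, size=n) < scores).astype(int)

thresholds, f1 = f1_curve(y, scores)
best = np.argmax(f1)
best_threshold, best_f1 = thresholds[best], f1[best]
print(f"max F1 = {best_f1:.3f}, optimal threshold = {best_threshold:.3f}, max F1 / 2 = {best_f1 / 2:.3f}")
```

Sorting once and taking cumulative sums evaluates F1 at every distinct threshold in O(n log n), instead of rescanning the data for each candidate threshold.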

Under the sea, in the hippocampus's garden...



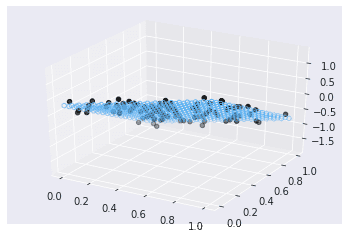

This post moves on to multiple linear regression, revisiting the method of least squares with linear algebra.
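As a rough illustration of the linear-algebra view (a sketch, not the post's code), the least-squares coefficients solve the normal equations X^T X beta = X^T y. The synthetic data and its true coefficients (1, 2, -3) are made up for this example, and the result is cross-checked against NumPy's `np.linalg.lstsq`.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 500
x = rng.normal(size=(n, 2))
# Made-up true model: y = 1 + 2 * x1 - 3 * x2 + noise.
y = 1.0 + 2.0 * x[:, 0] - 3.0 * x[:, 1] + rng.normal(scale=0.5, size=n)

X = np.column_stack([np.ones(n), x])              # design matrix with an intercept column
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)   # solve the normal equations
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)  # NumPy's least-squares solver
print(beta_normal, beta_lstsq)
```

Solving the system directly is preferable to forming the inverse of X^T X explicitly; for ill-conditioned designs, `lstsq` (which works on X itself) is the safer choice.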

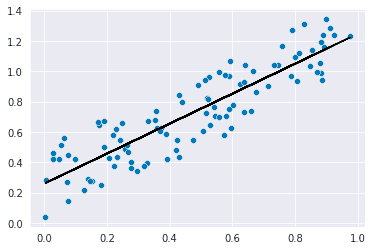

This post summarizes the basics of simple linear regression: the method of least squares and the coefficient of determination.
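A minimal sketch of those two basics on made-up data (true line y = 3 + 0.5x, an assumption of this example): the least-squares slope is S_xy / S_xx, the intercept makes the line pass through the means, and R² = 1 - SS_res / SS_tot.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.uniform(0.0, 10.0, size=200)
y = 3.0 + 0.5 * x + rng.normal(scale=1.0, size=200)  # made-up true line plus noise

# Least squares: slope = S_xy / S_xx; the fitted line passes through (x_mean, y_mean).
x_mean, y_mean = x.mean(), y.mean()
slope = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
intercept = y_mean - slope * x_mean

# Coefficient of determination: R^2 = 1 - SS_res / SS_tot.
y_hat = intercept + slope * x
r_squared = 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y_mean) ** 2)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}, R^2 = {r_squared:.3f}")
```

For simple regression with an intercept, this R² equals the squared Pearson correlation between x and y, which makes a convenient sanity check.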

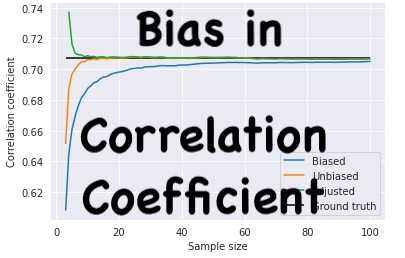

Is the sample correlation coefficient an unbiased estimator? No! This post visualizes how large its bias is and shows how to fix it.

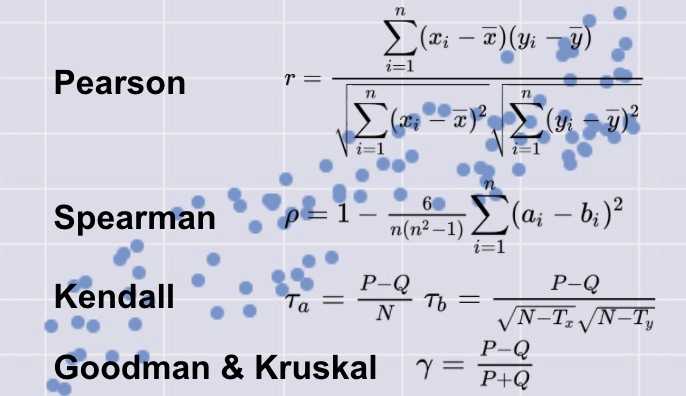

The correlation coefficient is a familiar statistic, but there are several variations whose differences should be noted. This post recaps the definitions of these common measures.

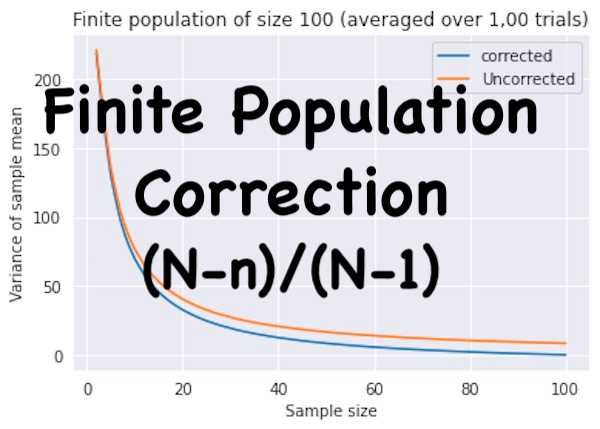

When you sample from a finite population without replacement, beware: the samples are not independent of each other, so the variance of the sample mean needs the finite population correction.
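A quick simulation sketch of that correction, assuming a made-up normal population of N = 100 values and samples of size n = 30. It compares the empirical variance of the sample mean with the naive sigma² / n and with the corrected sigma² / n × (N - n) / (N - 1), where sigma² is the population variance (divided by N).

```python
import numpy as np

rng = np.random.default_rng(2)
N, n, reps = 100, 30, 20_000
population = rng.normal(50.0, 10.0, size=N)  # made-up finite population
sigma2 = population.var()                    # population variance (divide by N)

# Each row is one sample of size n without replacement: the first n entries of a random permutation.
idx = rng.random((reps, N)).argsort(axis=1)[:, :n]
sample_means = population[idx].mean(axis=1)

empirical = sample_means.var()
naive = sigma2 / n                            # assumes independent draws
corrected = sigma2 / n * (N - n) / (N - 1)    # with the finite population correction
print(f"empirical {empirical:.3f}, naive {naive:.3f}, corrected {corrected:.3f}")
```

Here the correction factor is 70 / 99 ≈ 0.71, so the naive formula overstates the variance by roughly 40%.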

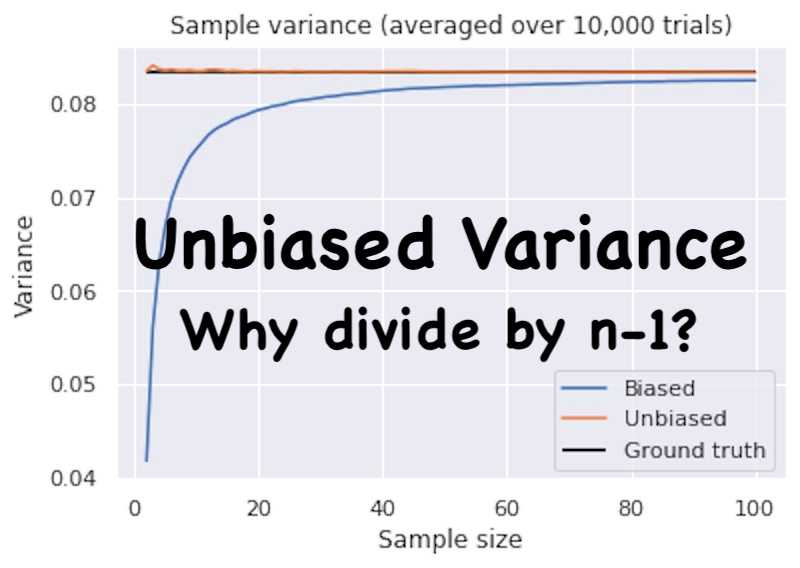

What is the unbiased sample variance, and why divide by n-1? A little Python programming makes it easier to understand.
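In that spirit, here is a small simulation sketch (not necessarily the post's own code), assuming normal data with true variance 4 and samples of size 5. Averaged over many samples, dividing by n should land near 4 × (n - 1) / n = 3.2, while dividing by n - 1 should land near 4.

```python
import numpy as np

rng = np.random.default_rng(3)
sigma2 = 4.0            # true variance of the distribution we sample from
n, reps = 5, 200_000    # many small samples of size n
samples = rng.normal(0.0, np.sqrt(sigma2), size=(reps, n))

biased = samples.var(axis=1, ddof=0).mean()    # divide by n
unbiased = samples.var(axis=1, ddof=1).mean()  # divide by n - 1
print(f"divide by n: {biased:.3f} (theory {sigma2 * (n - 1) / n}), "
      f"divide by n-1: {unbiased:.3f} (theory {sigma2})")
```

NumPy's `ddof` ("delta degrees of freedom") argument sets the divisor to n - ddof, so `ddof=1` gives the unbiased estimator.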

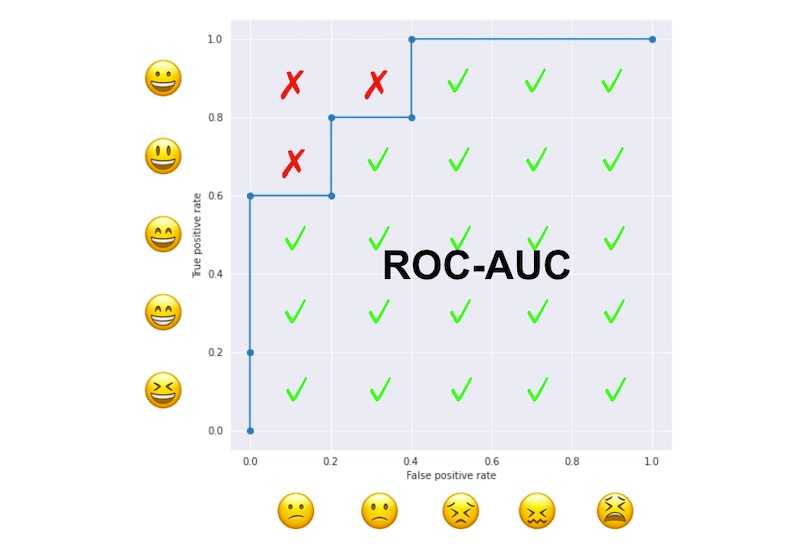

Why is ROC-AUC equal to the probability that a randomly chosen positive sample is ranked higher than a randomly chosen negative one? This post answers with a fun example.
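The identity can be checked directly with a short sketch on made-up scores (the helper names `roc_auc_trapezoid` and `pairwise_probability` are hypothetical). One function sweeps thresholds and integrates the ROC curve with the trapezoid rule; the other counts positive/negative pairs, scoring ties as 1/2. Scores are rounded on purpose so that ties occur.

```python
import numpy as np


def roc_auc_trapezoid(y, s):
    """Area under the ROC curve by sweeping thresholds and applying the trapezoid rule."""
    n_pos, n_neg = np.sum(y == 1), np.sum(y == 0)
    tpr, fpr = [0.0], [0.0]
    for t in np.unique(s)[::-1]:  # distinct scores, highest first
        pred = s >= t
        tpr.append(np.sum(pred & (y == 1)) / n_pos)
        fpr.append(np.sum(pred & (y == 0)) / n_neg)
    return sum((fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2 for i in range(1, len(fpr)))


def pairwise_probability(y, s):
    """P(score of a random positive > score of a random negative), counting ties as 1/2."""
    diff = s[y == 1][:, None] - s[y == 0][None, :]
    return np.mean(diff > 0) + 0.5 * np.mean(diff == 0)


rng = np.random.default_rng(4)
y = rng.integers(0, 2, size=300)
s = np.round(rng.normal(loc=y.astype(float), scale=1.0), 1)  # rounding creates ties on purpose
print(roc_auc_trapezoid(y, s), pairwise_probability(y, s))
```

The two numbers agree because each trapezoid slice adds, for the negatives at one threshold, the fraction of positives scored strictly above them plus half of those tied with them.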